Research

Welcome to the research division of the University of South Florida's Center for Assistive, Rehabilitation and Robotics Technologies (CARRT). Our research groups and laboratories combine innovative theory and state-of-the-art facilities to develop, analyze, and test cutting-edge assistive and rehabilitation robotics technologies.

Our faculty, staff, graduate and undergraduate students pursue a wide range of projects all focused on maintaining and enhancing the lives of people with disabilities.

Videos of CARRT research and service projects are available on the CARRT YouTube Channel.

Projects

Active Projects

Robotic Teleoperation to Autonomy through Learning

Abstract

The goal of this research is to create a human-robot collaborative system that learns from sensor-assisted teleoperation to reach the maximum possible autonomy with minimal user input. It is assumed that the user, while unable to do a task manually, can use a haptic interface to perform it through teleoperation. This system will utilize (a) the cognitive and perceptual abilities of an individual in a wheelchair with significantly limited motor skills, and (b) the superior dexterity, range of motion, and grasping power of a robot, along with its intention recognition and learning capabilities. Our system will seek autonomy by continuously learning from sensor-assisted and imprecise teleoperation by a human. Initially, the workload will be distributed between the robot and the human based on their abilities to perform ADL/IADL-related basic actions in (i) autonomous or (ii) sensor-assisted teleoperation modes. Any tasks or portions of tasks that can be performed autonomously will be done in autonomous mode, and the remaining tasks or subtasks will be done in sensor-assisted teleoperation mode. Over the long run, the human-robot collaborative system will gradually learn to perform these tasks autonomously.

Motivation

Individuals with diminished physical capabilities must often rely on assistants to perform Activities of Daily Living (ADL) or Instrumental Activities of Daily Living (IADL). Even though they lack or are severely limited in gross and fine motor skills, such individuals often have sound cognitive and perceptual abilities. In this work, we will focus on individuals with Muscular Dystrophy (MD), Multiple Sclerosis (MS), and Spinal Cord Injuries (SCI levels C5 to C7).

Live Drone Control using the Brain-Machine Interface

ABSTRACT

The purpose of this project is to use an active Steady-State Visual Evoked Potential (SSVEP)-based Brain Machine Interface (BMI) system to extract the signal coming from the pilot’s brain and to enhance engagement and attention to critical flight commands needed during flight. A method will be developed to read the BMI-SSVEP signal, digitize it, and use it to control the spatial position of a target destination for an active drone to follow. An Emotiv EPOC BMI headset is used to acquire the Electroencephalography (EEG) signal from the brain; this signal provides commands to the flight control system, and visual feedback is displayed to the pilot. The visual feedback will be based on real-time flight data and target location.
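The abstract does not say how the SSVEP response is decoded. One common approach compares spectral power at each flicker frequency (and its harmonics) and picks the strongest. The sketch below assumes a single preprocessed occipital EEG channel, a 128 Hz sampling rate, and hypothetical flicker frequencies mapped to hypothetical target-movement commands; it illustrates the idea and is not the project's decoder.

```python
import numpy as np


def ssvep_command(eeg, fs, stim_freqs, commands, harmonics=2):
    """Return the command whose flicker frequency carries the most spectral power.

    eeg: 1-D array from one (assumed preprocessed) occipital EEG channel.
    fs: sampling rate in Hz. stim_freqs/commands: hypothetical, index-aligned lists.
    """
    eeg = np.asarray(eeg, dtype=float)
    eeg = eeg - eeg.mean()                       # remove DC offset
    windowed = eeg * np.hanning(len(eeg))        # reduce spectral leakage
    power = np.abs(np.fft.rfft(windowed)) ** 2
    freqs = np.fft.rfftfreq(len(eeg), d=1.0 / fs)

    scores = []
    for f in stim_freqs:
        score = 0.0
        for h in range(1, harmonics + 1):        # SSVEP energy also appears at harmonics
            target = h * f
            if target < fs / 2:                  # skip harmonics above Nyquist
                score += power[np.argmin(np.abs(freqs - target))]
        scores.append(score)
    best = int(np.argmax(scores))
    return commands[best], scores


# Example: 4 s of synthetic EEG at 128 Hz with a 10 Hz SSVEP buried in noise.
fs = 128
t = np.arange(4 * fs) / fs
rng = np.random.default_rng(0)
signal = np.sin(2 * np.pi * 10 * t) + 0.5 * rng.standard_normal(t.size)
command, scores = ssvep_command(signal, fs, [8, 10, 12, 15], ["left", "right", "up", "down"])
```

Harmonics are summed because SSVEP power often appears at multiples of the flicker frequency; flicker frequencies should be chosen so that their harmonics do not coincide.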

Feedback-based Stroke Rehabilitation Using Multiple Simultaneous Therapies

DESCRIPTION

Walking is a fundamental daily activity, and independent walking is a primary goal for individuals with a stroke. However, fewer than 22% of people with a stroke regain sufficient functional walking to be considered independent community ambulators. Many individuals with a stroke have asymmetric walking patterns (e.g., different step lengths with each leg) that reduce walking efficiency, decrease walking speed, and increase the likelihood of injuries and falls. To get a feel for asymmetric gait, try wearing a thick-soled heavy shoe on one foot and going barefoot on the other, then try to walk with the same step length on each foot while keeping consistent timing between placing each foot on the ground. This simple perturbation will likely modify the gait so that the person has to push harder with one leg during walking. In contrast to the single perturbation in this example, each of the millions of individuals with a stroke has multiple asymmetric changes that compound the detrimental effects. While there are many different therapies to help individuals regain their walking ability, disabilities are unique and often need a solution specific to each person. This project will apply a combination of existing therapies simultaneously to generate a user-specific therapy that adapts to the individual's needs. This project focuses on gait rehabilitation after a stroke but may lead to benefits in therapies for gait recovery in individuals with lower limb amputations, hemiparetic cerebral palsy, and other gait impairments.

This project addresses research questions such as: (i) what level of symmetry can realistically be targeted for a patient with inherent functional asymmetry; (ii) what factors influence the perception of a symmetric gait; and (iii) how to model the interactions among multiple therapies used for rehabilitation. To answer these questions, the research team will first determine how the effects of two different therapies combine. Based on the results from multiple pairs of simultaneous therapies, the second phase will use real-time feedback based on the measured gait to optimize the output of two or more individual therapies. Controlling multiple therapies should allow control of multiple gait parameters, changing the gait pattern in a user-specific way. Since individuals with a stroke inherently have different force and motion capabilities in each leg, perfect symmetry may not be possible. Throughout this project, experiments will determine the bounds of acceptable asymmetry from a visual perspective. Understanding this perception will clarify what clinical physical therapists perceive about gait and help direct their attention to important parameters, particularly those that have a large impact on gait function but are not easily perceived. Although the resulting gait may retain some degree of asymmetry in all measures, the gait pattern will likely be less visually noticeable and meet the functional walking goals of individuals with asymmetric impairments.
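One common way to quantify step-length asymmetry is a percentage symmetry index, SI = 100 * (L - R) / (0.5 * (L + R)), where 0 means perfect symmetry. The sketch below computes it alongside a simple step-length ratio. It is a generic measure, not necessarily the one this project uses, and the tolerance band for "acceptable" asymmetry is a hypothetical placeholder for the bounds the project aims to determine experimentally.

```python
def symmetry_index(left, right):
    """Percent symmetry index: 0 is perfectly symmetric; the sign shows which side is larger."""
    mean = 0.5 * (left + right)
    if mean == 0:
        raise ValueError("left and right values cannot average to zero")
    return 100.0 * (left - right) / mean


def step_length_ratio(affected, unaffected):
    """Ratio of affected-side to unaffected-side step length; 1.0 is symmetric."""
    return affected / unaffected


def within_tolerance(si_percent, tolerance_percent):
    """True if |SI| falls inside a tolerance band (the band is a hypothetical placeholder)."""
    return abs(si_percent) <= tolerance_percent


# Example: step lengths in metres from one gait trial.
si = symmetry_index(0.60, 0.50)        # about 18.2 %
ratio = step_length_ratio(0.60, 0.50)  # 1.2
```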

Enhancement & Verification of Countermeasure Analysis Tools for Human Exploration Missions

ABSTRACT

As spaceflight missions increase in both duration and complexity, responses to environmental changes become a crucial focus for astronaut health and success. For long-term human spaceflight to the Moon or Mars, new, smaller exercise equipment will need to be designed to counter issues such as bone density loss, muscle atrophy, and decreased aerobic capacity. Paired with these exercise devices, a vibration isolation system (VIS) will also need to be developed to prevent cyclic exercise forces from being transmitted to the space vehicle. The proposed project seeks to study the human response (kinematics and kinetics) to ground perturbations in order to inform computational modeling of human exercise, which is used to determine VIS parameters and design considerations for long-term human spaceflight.
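To illustrate how isolator parameters trade off, the sketch below uses a single-degree-of-freedom spring-mass-damper model. This is a simplifying assumption; a real VIS model couples the exerciser, the device, and the vehicle. Force transmissibility T = sqrt((1 + (2ζr)²) / ((1 - r²)² + (2ζr)²)), with frequency ratio r = f / f_n, gives the fraction of cyclic exercise force that reaches the vehicle, and isolation (T < 1) only occurs above r = √2. The mass, stiffness, and damping values in the example are hypothetical.

```python
import math


def natural_frequency_hz(k, m):
    """Undamped natural frequency f_n of a spring (k, N/m) and mass (m, kg)."""
    return math.sqrt(k / m) / (2 * math.pi)


def damping_ratio(c, k, m):
    """Damping ratio zeta for viscous damping coefficient c (N*s/m)."""
    return c / (2 * math.sqrt(k * m))


def force_transmissibility(f_hz, k, m, c):
    """|F_transmitted / F_applied| for a single-DOF spring-mass-damper isolator."""
    r = f_hz / natural_frequency_hz(k, m)
    zeta = damping_ratio(c, k, m)
    numerator = 1 + (2 * zeta * r) ** 2
    denominator = (1 - r ** 2) ** 2 + (2 * zeta * r) ** 2
    return math.sqrt(numerator / denominator)


# Hypothetical isolator: 100 kg payload tuned to 1 Hz with 10% damping.
m = 100.0
k = m * (2 * math.pi) ** 2
c = 2 * 0.1 * math.sqrt(k * m)
```

Lowering f_n with a softer mount moves more of the exercise frequency content into the isolation region, while added damping limits amplification near resonance at the cost of slightly higher transmissibility at high frequencies.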

Biomechanical Analysis for Enhancement of Lunar Surface Operations Modeling

ABSTRACT

In an environment where gravity is so low that the body's orientation can be easily altered, astronauts face considerable challenges, and training is often necessary to prepare for such environments. Past lunar missions have shown a potential for astronauts to fall because of this effect: lunar gravity is about 17% of Earth's, only slightly above the roughly 15% of Earth's gravity that humans need to orient themselves properly. Because of the added difficulty of maintaining balance, together with other factors such as inadequate nutrition, muscle and bone loss, a shift in body fluid distribution, and insufficient sleep, astronauts often struggle to complete tasks due to high fatigue, even with countermeasures in place. To assess the feasibility of these lunar tasks, motion and force plate data can be collected using the Vicon Motion Capture system and analyzed to identify improvements that avoid increased exhaustion. The motion capture system gives a clear view of the body's mechanics as tasks such as walking, running, hopping, digging, lifting, and climbing stairs are completed.

Body-as-a-Network Monitoring and Alert System

ABSTRACT

The purpose of this project is to develop a body-as-a-network monitoring and alert system. The project will determine whether measuring changes in biosignals and emotions can be used to create an alert system that helps Service Members with situational awareness and emergency decision making in the field. The study will create combat simulations using the CAREN virtual reality system that require the user to make quick decisions while biosignals are measured, in order to determine which wearable sensors would be feasible as an alert system and communication device to improve situational awareness. Warnings triggered by changes in biosignals can be sent to other team members via text, auditory cues, tactile cues, or blinking lights, prompting them to heighten their focus.
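The abstract leaves the alert logic open. A minimal sketch, assuming one scalar biosignal stream (for example heart rate) and a per-user rolling baseline, flags samples that deviate from the baseline by more than a z-score threshold. The window size, threshold, and warm-up length are hypothetical, and dispatching the warning to team members (text, audio, tactile, lights) is outside the sketch.

```python
import statistics
from collections import deque


class BiosignalAlert:
    """Flags samples that deviate strongly from a rolling per-user baseline.

    window, z_threshold, and min_baseline are hypothetical tuning parameters.
    """

    def __init__(self, window=30, z_threshold=3.0, min_baseline=10):
        self.baseline = deque(maxlen=window)   # most recent non-alert samples
        self.z_threshold = z_threshold
        self.min_baseline = min_baseline       # warm-up samples before alerts are allowed

    def update(self, value):
        """Return True if value should trigger a warning to the team."""
        alert = False
        if len(self.baseline) >= self.min_baseline:
            mean = statistics.fmean(self.baseline)
            spread = statistics.pstdev(self.baseline)
            # A perfectly flat baseline (spread == 0) never triggers, avoiding division by zero.
            if spread > 0 and abs(value - mean) / spread > self.z_threshold:
                alert = True
        if not alert:
            self.baseline.append(value)        # keep outliers out of the baseline
        return alert


monitor = BiosignalAlert()
heart_rate = [70, 71, 72] * 5 + [110]          # beats per minute, synthetic
alerts = [monitor.update(v) for v in heart_rate]
```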

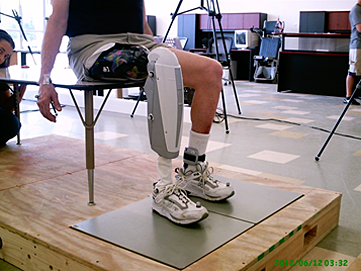

Monitoring Prosthetics and Orthotic Function in the Community

ABSTRACT

There is a need to better understand how lower-limb prosthesis or orthosis users function in the community outside the lab, in order to inform clinical decisions. The goal of this project is to verify a portable monitoring system's ability to measure prosthetic and orthotic function in the community and in return-to-duty situations. This project will address the DoD focus area of prosthetic and orthotic device function by testing a portable monitoring system that can be used in community and military-relevant activities to analyze variables relevant to measuring patient outcomes and prosthetic/orthotic use outside of a laboratory or clinic.

Past Projects

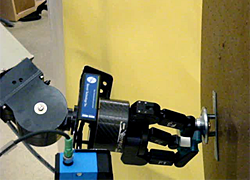

WMRA Robotic Gripper

Abstract

This paper presents a low-cost, self-contained robotic gripper that can be integrated into various robotic systems. Its design makes a wide array of object manipulation tasks easy, and it features a variety of sensors that help accomplish these tasks. Furthermore, the gripper has been made compatible with many robotic systems.

Motivation

Many grippers used in robotics today have capabilities that only allow them to perform certain tasks or grab certain objects. These grippers may lack additional features (e.g., cameras or sensors) that would help them perform tasks better. Additionally, many of these robotic grippers will only interface with the system they were designed for. To get the features our projects needed, along with the flexibility to move the gripper from system to system, we had to build a new gripper.

Design Features

The gripper features a small 24 V DC motor with an encoder that can be used to read the position and speed of the gripper. It also has a slip clutch to prevent overextension of the gripper. The gripper's unique cupped design emulates a human hand, allowing for more accurate grasping. Furthermore, the gripper contains a camera and a distance sensor for finding an object's position. Finally, the gripper has a current sensor that can be used to shut off the gripper if the force on the gripper exceeds a threshold set for a given object.
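The current-based shutoff can be sketched as a close-until-threshold loop. Everything below is an assumption for illustration: `SimulatedGripper` is a hypothetical stand-in for the hardware, its current model (free-motion draw plus a term proportional to squeeze past the contact point) is made up, and the per-object current limit would in practice be calibrated.

```python
from dataclasses import dataclass


@dataclass
class SimulatedGripper:
    """Hypothetical stand-in for the real gripper; all numbers are made up."""
    position: float = 0.0          # 0 = fully open, 1 = fully closed
    contact_position: float = 0.6  # where the fingers meet the object
    free_current: float = 0.2      # amps drawn while closing in free space
    stiffness: float = 20.0        # extra amps per unit of squeeze past contact

    def read_current(self):
        squeeze = max(0.0, self.position - self.contact_position)
        return self.free_current + self.stiffness * squeeze

    def step_close(self, increment):
        self.position = min(1.0, self.position + increment)


def close_until_current_limit(gripper, current_limit, increment=0.01):
    """Close in small steps; stop once motor current reaches the per-object limit."""
    while gripper.position < 1.0:
        if gripper.read_current() >= current_limit:
            return True   # object grasped; stop closing to avoid crushing it
        gripper.step_close(increment)
    return False          # closed fully without reaching the limit (nothing grasped)


gripper = SimulatedGripper()
grasped = close_until_current_limit(gripper, current_limit=1.0)
```

A lower limit suits fragile objects such as paper cups; the same loop with a pressure reading in place of current would implement the pressure cutoff in the Operation Program.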


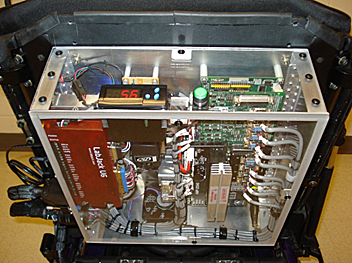

Packaging

In order for this gripper to interface with other robotic systems without modification to its design, it was necessary to bundle all of the required components into a single control box. This control box contains the microcontroller, motor controller, voltage regulators, and current sensing equipment required for the system to function. Additionally, the microcontroller allows for interfacing with the gripper via Ethernet, Wi-Fi, Bluetooth, or USB. The gripper assembly is powered by an external power supply (battery or DC adapter). To interface with the various components added to this gripper, a full software library was written in C++ that allows users to control the gripper, read sensor information, and view the camera without any additional programming. This library is cross-platform and can be used on mobile devices.

Operation

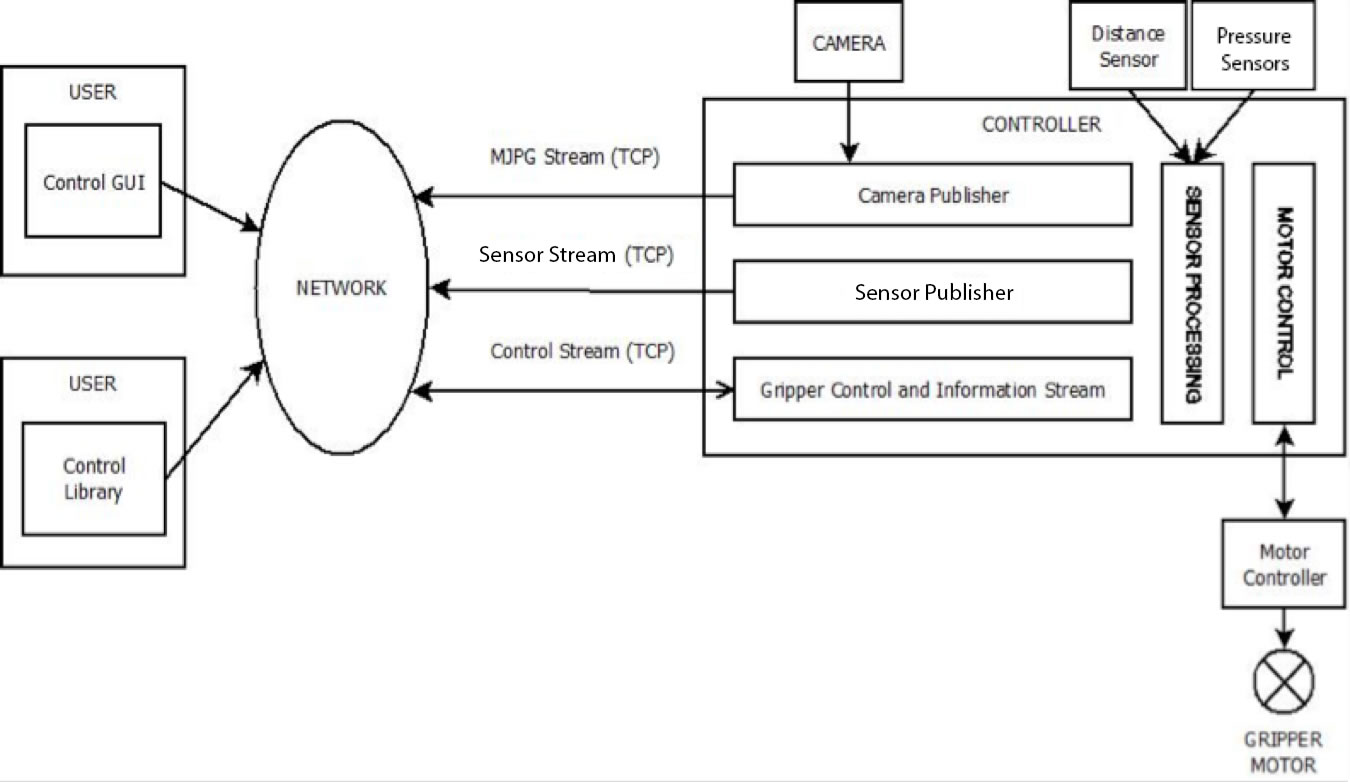

Above: The gripper control overview, showing the flow of control and sensory information when using a TCP/IP-based interface within the gripper system, including communication with the user.

The gripper system has several different interfaces through which it can be controlled. The gripper can either be controlled internally, in which the control programs are stored and run on the gripper's controller automatically, or remotely, in which the user is controlling the gripper from another device. The gripper system supports remote operation via Ethernet, Wi-Fi, and Bluetooth, with expansion to other communication formats available via the gripper controller's onboard USB ports.

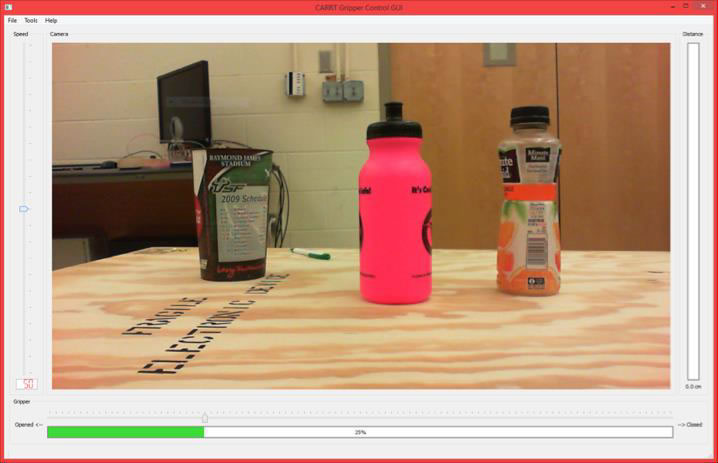

Included with the gripper system is a custom Gripper Operation Program that lets the user operate the gripper easily from a computer. The Gripper Operation Program allows the user to control how far the gripper is opened or closed via a simple slider bar, as well as the speed at which the gripper opens and closes. Additionally, the Operation Program shows all of the sensor information coming from the gripper, including the distance sensor, pressure sensors, and camera. The Operation Program also allows the user to set pressure cutoff limits. These cutoff limits force the gripper to stop closing or opening when a pressure threshold has been reached, which prevents the gripper from exerting too much or too little force on an object. The Operation Program is available for Windows, Mac OS X, and Linux.

Above: The Gripper Operation Program showing the camera view, gripper position controls, gripper speed adjustment, and distance sensor information

Results

While the all-in-one control system for the WMRA Gripper was still under development, the Gripper itself was used in the Wheelchair Mounted Robotic Arm (WMRA) as the system's primary gripper. Using this Gripper, the WMRA has successfully manipulated objects of different types, including bottles, cups, and markers. With the addition of current feedback and shutoff, the Gripper should gain the ability to grasp crushable objects without crushing them. Finally, the camera's high resolution and high frame rate should better support computer vision algorithms for object detection and classification of the target objects, and thus more accurate grasping.

Conclusion

This gripper is an effective, fully featured, and low-cost device that can be used in a wide array of robotic manipulation tasks. Additionally, the gripper can be added to existing robotic systems with little (if any) modification to the existing system or the gripper.

Neural Network Speech Recognition

ABSTRACT

This work focuses on enabling individuals with speech impairments to use speech-to-text software to recognize and dictate their speech. Automatic Speech Recognition (ASR) tends to be a challenging problem for researchers because of the wide range of speech variability, including differences in accent, pronunciation, speed, and volume. It is very difficult to train an end-to-end speech recognition model on data with speech impediments, both because large enough datasets are lacking and because a speech disorder pattern is difficult to generalize across all users with speech impediments. This work highlights the different deep learning techniques used to achieve ASR and how they can be modified to recognize and dictate speech from individuals with speech impediments.

NETWORK ARCHITECTURE AND EDIT DISTANCE

The project is split into three consecutive processes: ASR to phonetic transcription, edit distance, and a language model. The ASR is the most challenging due to the complexity of the neural network architecture and the preprocessing involved. We compute Mel-Frequency Cepstral Coefficients (MFCC) for each audio file, which yields 13 coefficients for each frame. The labels (text matching the audio) are converted to phonemes using the CMU ARPAbet phonetic dictionary. The network is trained using the MFCC coefficients as inputs and phoneme IDs as outputs. The network architecture implemented is a Bidirectional Recurrent Deep Neural Network (BRDNN, fig. 1); it consists of 2 LSTM cells (one in each direction) with 100 hidden blocks in each direction. The network is made deep by stacking two more layers, which results in a network three layers deep. Two fully connected layers with 128 hidden units each were attached to the output of the recurrent network. This architecture resulted in a 38.5% label error rate (LER) on the test set.

Figure 1: Deep Bidirectional LSTM

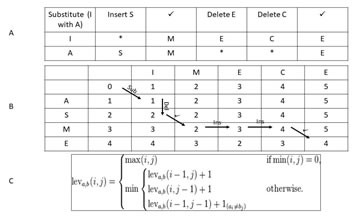

Levenshtein edit distance (fig. 2) is used to generate potential words from phonemes. An edit distance of one means a change of at most one phoneme is allowed when generating potential words, an edit distance of two means a change of one or two phonemes is allowed, and so on. These changes can be insertions, deletions, or substitutions. The language model uses the potential words to generate the most plausible sentences. The language model is another recurrent neural network trained on full sentences; it outputs the probability of a word occurring after a given word or sentence. It is simpler than the main speech recognition model because it is neither bidirectional nor as deep. The language model uses beam search decoding to find the best sentences.

Figure 2: A) Edit operations B) Dynamic programming of Edit Distance C) Algorithm from Wikipedia

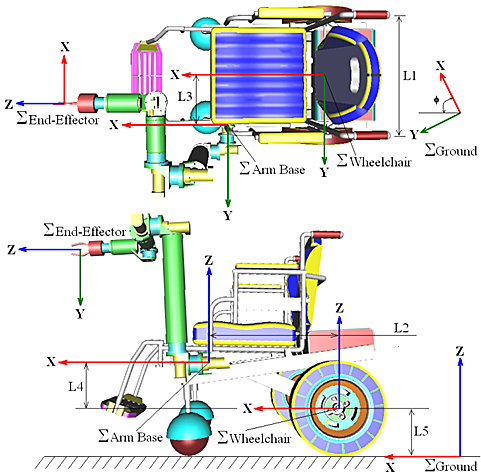

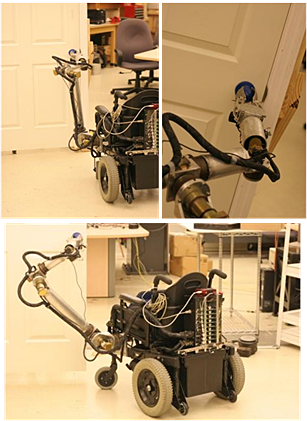
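The edit-distance and language-model stages can be sketched end to end. This sketch assumes the phoneme stream has already been split into word-sized segments, uses a tiny hypothetical lexicon in ARPAbet style (stress markers dropped), and substitutes a toy bigram table for the project's recurrent language model; the probabilities are invented for illustration. The same `edit_distance` function also gives LER when normalized by the reference length.

```python
import math


def edit_distance(a, b):
    """Levenshtein distance between two sequences (insertion, deletion, substitution each cost 1)."""
    prev = list(range(len(b) + 1))
    for i in range(1, len(a) + 1):
        curr = [i] + [0] * len(b)
        for j in range(1, len(b) + 1):
            cost = 0 if a[i - 1] == b[j - 1] else 1
            curr[j] = min(prev[j] + 1,         # delete a[i-1]
                          curr[j - 1] + 1,     # insert b[j-1]
                          prev[j - 1] + cost)  # substitute or match
        prev = curr
    return prev[-1]


def label_error_rate(predicted, reference):
    """Edit distance normalised by reference length."""
    return edit_distance(predicted, reference) / len(reference)


def candidate_words(phonemes, lexicon, max_distance):
    """Words whose pronunciation is within max_distance phoneme edits of the segment."""
    return [word for word, pron in lexicon.items()
            if edit_distance(phonemes, pron) <= max_distance]


def beam_search(candidate_lists, logprob, beam_width=3):
    """Choose one candidate per slot, keeping the beam_width best partial sentences."""
    beams = [(["<s>"], 0.0)]
    for candidates in candidate_lists:
        expanded = [(words + [w], score + logprob(words[-1], w))
                    for words, score in beams for w in candidates]
        expanded.sort(key=lambda item: item[1], reverse=True)
        beams = expanded[:beam_width]
    words, score = beams[0]
    return words[1:], score


# Hypothetical lexicon and toy bigram probabilities (stand-ins for the real dictionary and RNN LM).
LEXICON = {
    "I": ["AY"], "EYE": ["AY"],
    "WANT": ["W", "AA", "N", "T"], "WAN": ["W", "AA", "N"],
    "WATER": ["W", "AO", "T", "ER"], "WALTER": ["W", "AO", "L", "T", "ER"],
}
BIGRAMS = {("<s>", "I"): 0.5, ("<s>", "EYE"): 0.01, ("I", "WANT"): 0.4,
           ("I", "WAN"): 0.001, ("WANT", "WATER"): 0.3, ("WANT", "WALTER"): 0.01}


def toy_logprob(prev, word):
    p = BIGRAMS.get((prev, word))
    return math.log(p) if p else -10.0   # flat penalty for unseen word pairs


segments = [["AY"], ["W", "AA", "N"], ["W", "AO", "T", "ER"]]   # recognizer dropped the "T"
candidates = [candidate_words(seg, LEXICON, max_distance=1) for seg in segments]
sentence, _ = beam_search(candidates, toy_logprob)
```

Edit distance widens each segment into plausible words despite the dropped phoneme, and the language model then resolves homophones such as "I" and "EYE" from context.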

Wheelchair Mounted Robotic Arm

WMRA is a project that combines a wheelchair's mobility control and a 7-joint robotic arm's manipulation control in a single control mechanism. This allows people with disabilities to perform many activities of daily living (ADL) with minimal or no assistance, including some activities and tasks that are otherwise hard or impossible for them to accomplish.

Hardware Design and Control

- Seven motorized revolute joints allow the arm to reach any position in space within its range of motion.

- Modular arm design, so the workspace of the arm can be changed to accommodate individual needs.

- Custom-designed gripper to pick up a range of objects from a sheet of paper to a soccer ball. Adjustable claw tilt to accommodate different shapes.

- Servo control boards and motors.

- Control system that combines mobility and manipulation in a smart 9-degree-of-freedom control algorithm.

- Redundancy resolution is used for increased maneuverability, to eliminate singularities, and to avoid joint limits and obstacles.

- Weight and payload: 24 lbs / 8.5 lbs

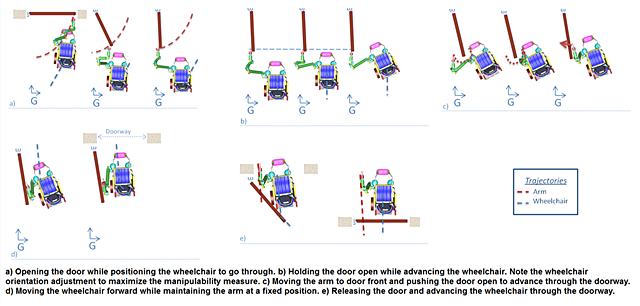

Task-Oriented Control of the WMRA System

- Optimization methods are used to fulfill two separate trajectories simultaneously, one for the gripper and the other for the wheelchair.

- Having two separate trajectories can be utilized to achieve various ADL tasks, such as opening a spring-loaded door inwards and going through the door while maintaining the pose of the gripper holding the door knob.

- Redundancy was resolved to maximize the manipulability measure during the task performance. The wheelchair motion was used to compensate for the decrease in manipulability measure of the arm while going through the door.
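The page does not specify the optimization used. A common technique for this kind of redundancy resolution is gradient projection: track the task velocity with the Jacobian pseudoinverse and add joint motion in the Jacobian's null space along the gradient of the manipulability measure w = sqrt(det(J Jᵀ)). The sketch uses a hypothetical planar 3-joint arm with a 2-D position task rather than the 9-DoF WMRA kinematics.

```python
import numpy as np

LINKS = np.array([0.4, 0.3, 0.2])  # hypothetical link lengths in metres


def jacobian(q, links=LINKS):
    """2x3 position Jacobian of a planar arm with three revolute joints."""
    angles = np.cumsum(q)                      # absolute angle of each link
    J = np.zeros((2, len(q)))
    for i in range(len(q)):                    # joint i moves links i..end
        J[0, i] = -np.sum(links[i:] * np.sin(angles[i:]))
        J[1, i] = np.sum(links[i:] * np.cos(angles[i:]))
    return J


def manipulability(q):
    """Yoshikawa measure w = sqrt(det(J J^T))."""
    J = jacobian(q)
    return np.sqrt(max(np.linalg.det(J @ J.T), 0.0))


def manipulability_gradient(q, eps=1e-6):
    """Central-difference gradient of w with respect to the joint angles."""
    grad = np.zeros(len(q))
    for i in range(len(q)):
        dq = np.zeros(len(q))
        dq[i] = eps
        grad[i] = (manipulability(q + dq) - manipulability(q - dq)) / (2 * eps)
    return grad


def resolve_rates(q, xdot, k=1.0):
    """Track xdot exactly; use leftover (null-space) freedom to climb the manipulability gradient."""
    J = jacobian(q)
    J_pinv = np.linalg.pinv(J)
    null_projector = np.eye(len(q)) - J_pinv @ J
    return J_pinv @ xdot + k * null_projector @ manipulability_gradient(q)


q = np.array([0.3, 0.5, 0.4])
qdot = resolve_rates(q, np.array([0.05, 0.0]))
```

Because the null-space term satisfies J (I - J⁺J) = 0, it changes the arm's posture without disturbing the commanded end-effector motion; in the full system the wheelchair's two degrees of freedom would simply extend q and J.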

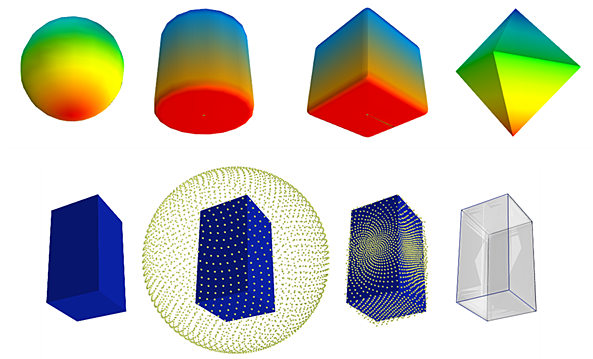

Object shape and pose recovery of unknown objects

- Reconstruct the shape and pose of a novel object to a sufficient degree of accuracy such that it permits grasp and manipulation planning.

- This is implemented in two different ways. The first captures three images of the object and generates a silhouette and a point cloud that approximates the surface of the object, then improves the approximation by fitting a superquadric shape to the points.

- The second uses saliency detection: effective shapes are extracted from a complex object image, then reconstructed and used for grasping.

- Microsoft Kinect is used to get the image and 3D information of objects of interest.
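Superquadric fitting usually minimizes how far points lie from the surface defined by the inside-outside function F(x, y, z) = ((x/a1)^(2/ε2) + (y/a2)^(2/ε2))^(ε2/ε1) + (z/a3)^(2/ε1), which equals 1 on the surface. The sketch below assumes the points are already expressed in an object-centered, axis-aligned frame (real fitters also estimate pose) and replaces the usual nonlinear least-squares solver with a coarse grid search to stay short; the sizes and shape exponents are hypothetical.

```python
import numpy as np


def signed_power(base, exponent):
    """Sign-preserving power used in the superquadric parameterisation."""
    return np.sign(base) * np.abs(base) ** exponent


def superquadric_points(scales, exponents, n=20):
    """Sample surface points of an axis-aligned superquadric."""
    a1, a2, a3 = scales
    e1, e2 = exponents
    eta, omega = np.meshgrid(np.linspace(-np.pi / 2, np.pi / 2, n),
                             np.linspace(-np.pi, np.pi, n))
    x = a1 * signed_power(np.cos(eta), e1) * signed_power(np.cos(omega), e2)
    y = a2 * signed_power(np.cos(eta), e1) * signed_power(np.sin(omega), e2)
    z = a3 * signed_power(np.sin(eta), e1)
    return np.column_stack([x.ravel(), y.ravel(), z.ravel()])


def inside_outside(points, scales, exponents):
    """F < 1 inside, F = 1 on the surface, F > 1 outside."""
    a1, a2, a3 = scales
    e1, e2 = exponents
    x, y, z = np.abs(points).T
    xy = (x / a1) ** (2 / e2) + (y / a2) ** (2 / e2)
    return xy ** (e2 / e1) + (z / a3) ** (2 / e1)


def fit_residual(points, scales, exponents):
    """Sum of squared (F^e1 - 1); zero when every point lies on the surface."""
    f = inside_outside(points, scales, exponents)
    return float(np.sum((f ** exponents[0] - 1.0) ** 2))


def grid_fit(points, scale_options, exponent_options):
    """Coarse stand-in for nonlinear least squares: try every combination, keep the best."""
    candidates = [(s, e) for s in scale_options for e in exponent_options]
    return min(candidates, key=lambda c: fit_residual(points, c[0], c[1]))


# Synthetic "point cloud" from a rounded, cylinder-like object (hypothetical size in metres).
cloud = superquadric_points((0.05, 0.03, 0.10), (0.5, 1.0))
```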

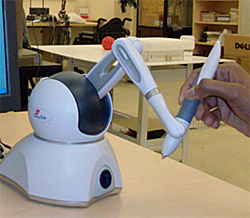

Motion scaling and grasping

- Grasps which involve a human and a robot working together are more robust than those which are either autonomous or teleoperated.

- Intention-based assistance to the human teleoperating the arm, in the form of motion scaling, can enhance the arm's usability and the user's capabilities.

- Kinect is used to extract basic shape and pose information of objects, and a pre-shape configuration for grasping is then determined through intention estimation algorithms (Hidden Markov Model).

- Motion assistance is provided to the user based on the pre-shape configuration by scaling up the user's motion towards that configuration and scaling down the user's motion against that configuration.

- Fine adjustment of the gripper's pose is done using simple geometrical methods and finally the grasp is realized.

- A PHANTOM Omni haptic device from SensAble Technologies is used as the master device and the WMRA as the remote robot.
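The motion-scaling rule can be sketched by splitting the operator's commanded velocity into a component along the direction to the pre-shape position and a remainder. The gains are hypothetical, the sketch handles position only (the pre-shape configuration would also include orientation), and leaving the sideways component unscaled is an assumption.

```python
import numpy as np


def scale_user_motion(user_velocity, gripper_pos, preshape_pos, k_toward=2.0, k_away=0.5):
    """Amplify motion toward the pre-shape position, attenuate motion away from it.

    k_toward and k_away are hypothetical gains; the sideways component passes through unchanged.
    """
    v = np.asarray(user_velocity, dtype=float)
    direction = np.asarray(preshape_pos, dtype=float) - np.asarray(gripper_pos, dtype=float)
    distance = np.linalg.norm(direction)
    if distance < 1e-9:
        return v                                 # already at the pre-shape position
    u = direction / distance                     # unit vector toward the target
    along = float(np.dot(v, u))                  # signed speed toward the target
    sideways = v - along * u
    gain = k_toward if along > 0 else k_away
    return gain * along * u + sideways


toward = scale_user_motion([1.0, 0.0, 0.0], [0, 0, 0], [1, 0, 0])
away = scale_user_motion([-1.0, 0.0, 0.0], [0, 0, 0], [1, 0, 0])
```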

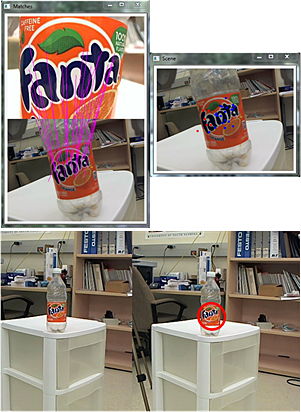

Mobility & Manipulation using Visual Servoing Control

- An image-based visual servoing (IBVS) approach with scale-invariant feature transform (SIFT) was used for combined autonomous control of mobility and manipulation for the 9-DoF WMRA system.

- Physical implementations of a "Go to and Pick Up" task and a "Go to and Open the Door" task were developed and presented.

- A Logitech C910 USB webcam mounted in an eye-in-hand configuration on the end effector was used to capture a 30 fps video stream of the environment with the object in sight.

- To estimate the distance from the camera to the goal object, a Sharp GP2Y0A21YK infrared proximity sensor mounted just beneath the camera was used.

- Users operate the system with a laptop using a GUI developed for the application.
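The classic IBVS control law drives the image-feature error e to zero with the camera velocity v = -λ L⁺ e, where L stacks the interaction matrix of each point feature. The sketch below assumes SIFT matching has already produced feature points in normalized image coordinates (pixel coordinates converted with hypothetical camera intrinsics) and that the infrared range reading supplies the depth Z for every point; mapping the resulting camera twist onto the arm and wheelchair joints is not shown.

```python
import numpy as np


def interaction_matrix(x, y, Z):
    """Image Jacobian of a normalised image point (x, y) at depth Z."""
    return np.array([
        [-1.0 / Z, 0.0, x / Z, x * y, -(1.0 + x * x), y],
        [0.0, -1.0 / Z, y / Z, 1.0 + y * y, -x * y, -x],
    ])


def ibvs_velocity(features, desired, depths, gain=0.5):
    """Camera twist (vx, vy, vz, wx, wy, wz) that moves features toward their desired positions."""
    features = np.asarray(features, dtype=float)
    desired = np.asarray(desired, dtype=float)
    error = (features - desired).ravel()        # [x1, y1, x2, y2, ...]
    L = np.vstack([interaction_matrix(x, y, Z) for (x, y), Z in zip(features, depths)])
    return -gain * np.linalg.pinv(L) @ error


# Four matched points whose current positions are shifted right of the goal view.
desired = [(-0.1, -0.1), (0.1, -0.1), (0.1, 0.1), (-0.1, 0.1)]
current = [(x + 0.02, y) for x, y in desired]
depths = [1.0] * 4                              # e.g. one infrared range reading for all points
twist = ibvs_velocity(current, desired, depths)
```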

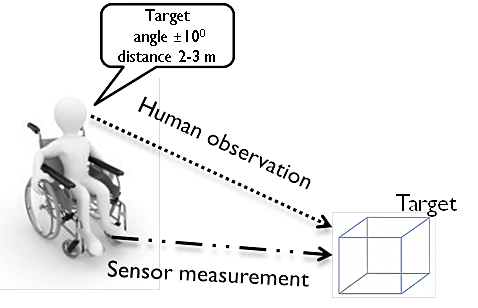

Autonomous Navigation, Simultaneous Localization and Mapping (SLAM)

- A simultaneous localization and mapping (SLAM) algorithm is used to localize the wheelchair in an unstructured environment and to build a dynamically changing map of the environment.

- Motion planning and navigation can be optimized based on SLAM data to reach a moving target and achieve a task while avoiding moving obstacles.

- Human perception and observations can be integrated into the algorithm to achieve better results and more accurate task implementation.

- Microsoft Kinect is utilized to capture the 3D information of the environment.

- An Extended Kalman Filter (EKF) is used for localization.
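An EKF localization step can be sketched with a unicycle motion model for the wheelchair and a range-bearing observation of one known landmark. This is a simplification under stated assumptions: full EKF-SLAM would also carry landmark positions in the state, and the Kinect-derived measurements, the noise matrices Q and R, and the landmark used here are hypothetical.

```python
import numpy as np


def wrap_angle(a):
    """Wrap an angle to [-pi, pi)."""
    return (a + np.pi) % (2 * np.pi) - np.pi


def ekf_predict(mu, P, v, w, dt, Q):
    """Propagate pose [x, y, theta] with a unicycle model driven by speed v and turn rate w."""
    x, y, th = mu
    mu_new = np.array([x + v * dt * np.cos(th), y + v * dt * np.sin(th), wrap_angle(th + w * dt)])
    F = np.array([[1.0, 0.0, -v * dt * np.sin(th)],   # Jacobian of the motion model
                  [0.0, 1.0, v * dt * np.cos(th)],
                  [0.0, 0.0, 1.0]])
    return mu_new, F @ P @ F.T + Q


def ekf_update(mu, P, z, landmark, R):
    """Correct the pose with a range-bearing measurement z = [r, phi] of a known landmark."""
    dx, dy = landmark[0] - mu[0], landmark[1] - mu[1]
    q = dx * dx + dy * dy
    r = np.sqrt(q)
    z_hat = np.array([r, wrap_angle(np.arctan2(dy, dx) - mu[2])])
    H = np.array([[-dx / r, -dy / r, 0.0],              # Jacobian of the measurement model
                  [dy / q, -dx / q, -1.0]])
    innovation = np.asarray(z, dtype=float) - z_hat
    innovation[1] = wrap_angle(innovation[1])
    S = H @ P @ H.T + R
    K = P @ H.T @ np.linalg.inv(S)                      # Kalman gain
    mu_new = mu + K @ innovation
    mu_new[2] = wrap_angle(mu_new[2])
    return mu_new, (np.eye(3) - K @ H) @ P


mu0, P0 = np.zeros(3), np.diag([0.1, 0.1, 0.01])
Q, R = np.diag([0.01, 0.01, 0.001]), np.diag([0.01, 0.001])
mu_pred, P_pred = ekf_predict(mu0, P0, v=1.0, w=0.0, dt=1.0, Q=Q)
mu_upd, P_upd = ekf_update(mu_pred, P_pred, [3.8, 0.0], (5.0, 0.0), R)
```

In the example, odometry predicts x = 1.0 m while the landmark range implies about 1.2 m; the update lands between the two, weighted by their uncertainties.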

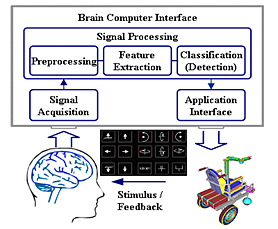

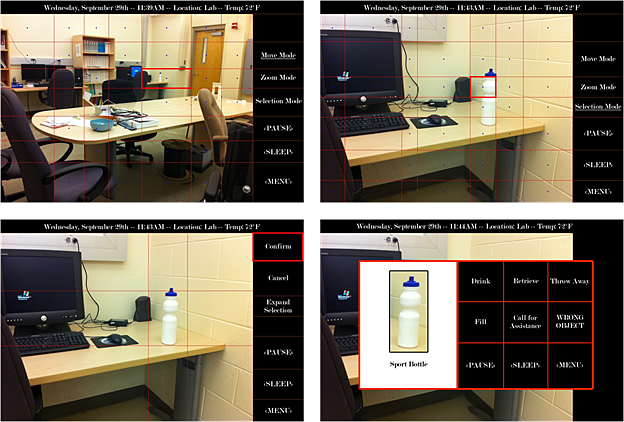

Vision-based system for selecting objects and tasks using a Brain-Computer Interface (BCI)

- Communicating choices to the system through a Brain-Computer Interface can be very tiring and slow. A vision-based algorithm is being developed to make any user interface system, including the BCI, easier to use for controlling the WMRA.

- This interface is useful for patients who are completely locked-in and are unable to use any body parts for doing their daily activities.

- In order for this selection system to be operable by BCI, the scene is divided into a grid, like that used in the P300 Speller.

- The user needs to select the cell whose blue dot falls on the object of interest.

- The cells are flashed in a random order. A cap with electrodes worn by the user records Electroencephalography (EEG) signals, in which a P300 response is detected when an Event-Related Potential (ERP) is generated.

- The ERP is generated according to the oddball paradigm, in which a response occurs when a rare (odd) event appears within a sequence of events. The odd event in this case is the flashing of the cell of interest among the random flashes.

- The BCI2000 program detects the cell on which the user is focusing attention, and the object is then segmented using a flood-fill algorithm and edge detection.

- Once the object is selected, it runs through an object recognition algorithm to recognize the object and create a list of possible tasks to do with the object. This list is displayed to the user and interfaced with the BCI.

- The program is being developed to include functions for zooming and moving the robot to allow for more precise selection.

- The gridlines are generated intelligently using edge detection output, to increase the likelihood of selection in a single iteration.

- This system will also be operable by a myriad of other user input devices, including eye tracking, voice control, and touch-screen.

- Previous implementations included directional control of the WMRA system using BCI for teleoperation. This implementation adds the vision-based algorithm, integrated with the BCI, for autonomous motion.
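The segmentation step can be sketched as a breadth-first flood fill that starts at the selected cell's dot and grows through pixels not marked by the edge detector. The binary edge-map format and 4-connectivity are assumptions; the page does not describe the project's implementation.

```python
from collections import deque


def flood_fill_segment(edges, seed):
    """Return the set of (row, col) pixels reachable from seed without crossing edge pixels.

    edges: 2-D list of 0/1 values where 1 marks an edge-detector response.
    seed: (row, col) of the selected cell's dot, assumed to lie on the object.
    """
    rows, cols = len(edges), len(edges[0])
    seed = tuple(seed)
    if edges[seed[0]][seed[1]]:
        return set()                             # seed sits on an edge; nothing to fill
    region = {seed}
    queue = deque([seed])
    while queue:
        r, c = queue.popleft()
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):   # 4-connected neighbours
            if 0 <= nr < rows and 0 <= nc < cols and not edges[nr][nc] and (nr, nc) not in region:
                region.add((nr, nc))
                queue.append((nr, nc))
    return region


# A closed square edge contour: the interior pixel is the "object", the rest is background.
edge_map = [
    [0, 0, 0, 0, 0],
    [0, 1, 1, 1, 0],
    [0, 1, 0, 1, 0],
    [0, 1, 1, 1, 0],
    [0, 0, 0, 0, 0],
]
object_pixels = flood_fill_segment(edge_map, (2, 2))
```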

Experimental evaluation of WMRA devices

- The JACO arm from Kinova and the iARM from Exact Dynamics are two internationally available commercial wheelchair-mounted robotic arms (WMRAs).

- Experimental evaluation of commercially available WMRAs in a controlled test environment has been conducted to study the efficacy of using such devices.

- The goal was to quantitatively compare each device through a standardized testing protocol. The study produced theoretical manipulability measurements as well as efficacy ratings of each device based on Denavit-Hartenberg kinematic parameters and physical testing, respectively.

- Both the manipulator and control devices of WMRA systems were evaluated. The iARM was found to be more effective than the JACO arm based on kinematic analysis. Despite this, the JACO arm was shown to be more effective than the iARM system in three of four experimental tasks.

- Effective design features were brought to light with these results. The study and its procedures may serve as a source of quantitative and qualitative data for the commercially available WMRAs.
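Theoretical manipulability from Denavit-Hartenberg parameters can be sketched by composing standard DH link transforms, differentiating the end-effector position numerically, and evaluating Yoshikawa's measure sqrt(det(J Jᵀ)). The DH rows in the example are a hypothetical planar two-link arm, not the JACO or iARM parameters, and the study may have used the full position-and-orientation Jacobian rather than the position-only one shown here.

```python
import numpy as np


def dh_transform(a, alpha, d, theta):
    """Standard Denavit-Hartenberg link transform Rz(theta) Tz(d) Tx(a) Rx(alpha)."""
    ca, sa = np.cos(alpha), np.sin(alpha)
    ct, st = np.cos(theta), np.sin(theta)
    return np.array([
        [ct, -st * ca, st * sa, a * ct],
        [st, ct * ca, -ct * sa, a * st],
        [0.0, sa, ca, d],
        [0.0, 0.0, 0.0, 1.0],
    ])


def forward_position(dh_rows, q):
    """End-effector position for revolute joints; each row is (a, alpha, d, theta_offset)."""
    T = np.eye(4)
    for (a, alpha, d, offset), qi in zip(dh_rows, q):
        T = T @ dh_transform(a, alpha, d, offset + qi)
    return T[:3, 3]


def position_jacobian(dh_rows, q, eps=1e-6):
    """Central-difference Jacobian of the end-effector position (3 x n)."""
    q = np.asarray(q, dtype=float)
    J = np.zeros((3, len(q)))
    for i in range(len(q)):
        dq = np.zeros_like(q)
        dq[i] = eps
        J[:, i] = (forward_position(dh_rows, q + dq) - forward_position(dh_rows, q - dq)) / (2 * eps)
    return J


def yoshikawa_manipulability(J):
    """w = sqrt(det(J J^T)); zero at singular configurations."""
    return float(np.sqrt(max(np.linalg.det(J @ J.T), 0.0)))


# Hypothetical planar two-link arm with 1 m links.
rows = [(1.0, 0.0, 0.0, 0.0), (1.0, 0.0, 0.0, 0.0)]
J = position_jacobian(rows, [0.3, np.pi / 2])
w = yoshikawa_manipulability(J[:2, :])   # keep only the planar (x, y) rows
```

When the Jacobian has more rows than joints (here a planar arm described in 3-D), pass only the task-relevant rows; otherwise the determinant is zero.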

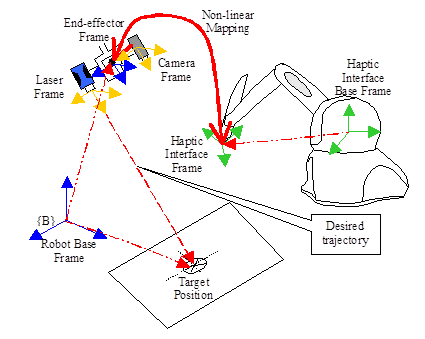

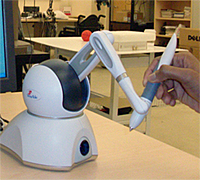

Laser Assisted Real-Time and Scaled Telerobotic Manipulator Control with Haptic Feedback for Activities of Daily Living

This is a novel method of using laser data to generate trajectories and virtual constraints in real time that assist the user teleoperating a remote arm to execute tasks in a remote unstructured environment.

The laser also helps the user make high-level decisions, such as selecting target objects by pointing the laser at them. The trajectories generated from the laser data enable autonomous control of the remote arm, and the virtual constraints enable scaled teleoperation and virtual-fixture-based teleoperation. In the scaled and virtual-fixture-based teleoperation modes, the assistance to the user is based on either position feedback or force feedback to the master. The user also has the option of a velocity control mode in teleoperation, in which the speed of the remote arm is proportional to the displacement of the master from its initial position. At any point, the user can choose a suitable control mode after locating targets with the laser. The various control modes have been compared with each other, and time- and accuracy-based results have been presented for a 'pick and place' task carried out by three healthy subjects. The system is intended to assist users with disabilities in carrying out their ADLs (Activities of Daily Living) but can also be used for other applications involving teleoperation of a manipulator. The system is PC-based and uses multithreaded programming strategies for real-time arm control, and the controller is implemented on QNX.

Adaptive Recreation

Through collaboration with the School of Theatre & Dance and the School of Physical Therapy and Rehabilitation Sciences, adaptive recreational devices have been designed and developed to assist people with disabilities and amputees in various recreational activities, including dance and exercise.

Hands-Free Wheelchair

A completely hands-free wheelchair that responds to the user's body motion was developed, primarily for use in the performing arts; however, its unique user interface offers many possibilities in the fields of assistive devices for daily activities and rehabilitation. This powered wheelchair modification provides greater opportunities for social interaction, increases the user's independence, and advances the state of the art in sports and recreation, as well as assistive and rehabilitative technologies overall. Various prototypes of this project have been developed, including a mechanical design and a sensor-based design. A new design is underway that uses an iPod or other handheld device to control the wheelchair through the device's gyroscope.

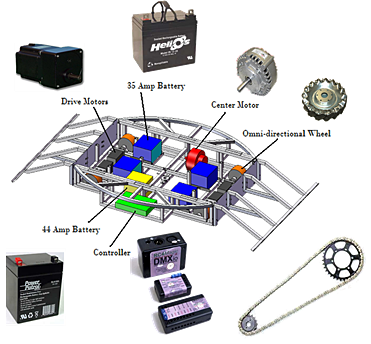

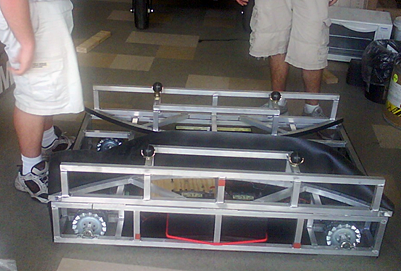

Omni-directional Rotating Platform for Dance

This project involves the design, development, and testing of a stand-alone omnidirectional mobile dance platform with an independently rotating top. The platform is designed to be robust, remote controlled, compact, transportable, and inexpensive. It adds a choreographic element that creates a unique style of dancing involving a variety of mobility devices and performers, including dancers with disabilities. The platform is designed to hold up to 500 pounds, with a top that rotates independently while the base moves forward/backward, sideways, or diagonally using omnidirectional wheels. The existing design has a removable top surface, folding wing sections that collapse the unit to fit through an average-size doorway, and detachable ramp ends for wheelchair access. The top of the platform is driven by a compact gear train designed to deliver maximum torque within the limited space.

Terminal Devices for Prostheses Users

Various terminal devices have been developed to assist prosthesis users in their recreational activities. These terminal devices are designed to improve users' capabilities in golf, kayaking, rock climbing, and other activities.

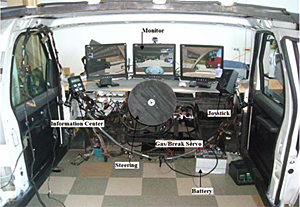

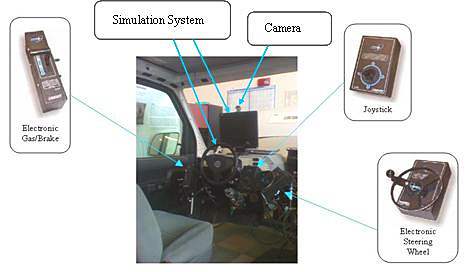

Adaptive Driving Simulation

A driver training system that combines a hand-controlled modified van with a driving simulator has been developed. This system helps individuals overcome transportation barriers that interfere with employment opportunities or access to daily activities. By combining AEVIT (Advanced Electronic Vehicle Interface Technology) with a virtual reality driving simulator from SSI (Simulator Systems International), an environment is created in which a user can learn to operate a motor vehicle in real time through different interfaces. Various adaptive controls are integrated into the system. Analysis of various controls across different user abilities can be used to recommend specific devices and to train users in the virtual environment before they train in their modified vehicle.

Asymmetric Passive Dynamic Walkers

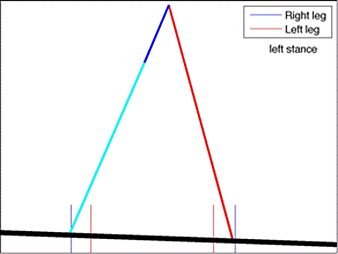

Passive dynamic walkers (PDW) are devices that can walk down a slope without any active feedback, using gravity as their only energy source. In this research, we are examining asymmetric walking with an approach that is similar to, but different from, the Gait Enhancing Mobile Shoe project described below. Typically, PDW studies have used symmetric walkers (i.e., the same masses and lengths on each side), which generally produce symmetric gaits. However, individuals with a stroke and individuals who wear a prosthesis do not have physical symmetry between the two sides of their body. By changing one physical parameter on one of the two legs of the PDW, we can show a number of stable asymmetric gait patterns in which one leg has a consistently different step length than the other, as shown on the right. The figure on the right has the right knee moved up the leg. This asymmetric model of walking will enable us to test how different physical changes affect the way individuals alter their gait.

Bimanual Symmetric Motions

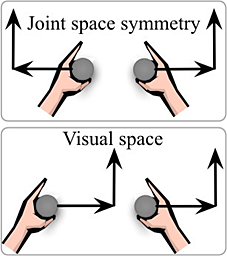

Many daily tasks, such as opening the lid of a jar or moving a large book, require a person to use both hands simultaneously. Such bimanual tasks are difficult for people who have had a stroke, but the tight neural coupling across the body may allow individuals to self-rehabilitate by physically coupling their hands. To examine potential methods for robot-assisted bimanual rehabilitation, we are performing haptic tracking experiments in which individuals feel a trajectory on one hand and attempt to recreate it with the other hand. Despite the physical symmetry of the body, the results show that joint-space motions are more difficult to reproduce than motions in visually centered space.

Gait Enhancing Mobile Shoe

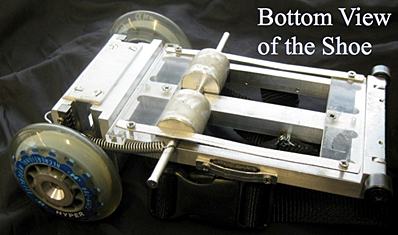

Certain types of central nervous system damage, such as stroke, can cause an asymmetric walking gait. One rehabilitation method uses a split-belt treadmill, which moves each leg at a different speed while the foot is in contact with the ground. Split-belt treadmill training has been shown to help walking-impaired individuals correct their gait on the treadmill, but it has one distinct drawback: the corrected gait does not transfer well to walking over ground. To improve this transfer to walking over ground, I designed and built a passive shoe that produces a motion similar to that felt when walking on a split-belt treadmill. The gait enhancing mobile shoe (GEMS) alters the wearer's gait by moving one foot backward during the stance phase while walking over ground. No external power is required, since the shoe mechanically converts the wearer's downward and horizontal forces into backward motion. This shoe allows a patient to walk over ground while experiencing the same gait-altering effects as on a split-belt treadmill, which should aid in transferring the corrected gait to natural environments. This work is funded by the Eunice Kennedy Shriver National Institute of Child Health & Human Development (NIH NICHD), award number R21HD066200, and is in collaboration with Amy Bastian at the Kennedy Krieger Institute and Erin Vasudevan at the Moss Rehabilitation Research Institute.

Using Virtual Reality and Robotics Technologies for Vocational Evaluation, Training and Placement

The goal of this project is to improve the effectiveness of vocational rehabilitation services by providing a safe, adaptable, and motivating environment in which to assess and train individuals with severe disabilities and members of underserved groups. Using virtual reality, simulators, robotics, and feedback interfaces, this project will allow the vocational rehabilitation population to try various jobs, tasks, virtual environments, and assistive technologies before entering the actual employment setting. This will help job evaluators and job coaches assess, train, and place persons with various impairments. Specifically, the proposed project will:

- Develop layered 3D virtual reality simulation and controlled physical environments for several job-related tasks (customer service, hospitality industry, and production environments)

- Assess DVR clients' work abilities with their trainers and find possible work venues using virtual reality and controlled physical environments.

- Train DVR clients for the job of their choice among the possible job environments identified in the assessments.

- Place clients in jobs and follow up to gather feedback and make adjustments.

The proposed project will simulate job environments such as a commercial kitchen, an industrial warehouse, a retail store, or other locations where an individual is likely to work. Features of the simulator could include layering of colors, ambient noise, physical reach parameters, and various user interfaces. The complexity of the simulated job tasks could be varied according to the user's limitations, allowing a gradual progression to more complex tasks that enhances training and job placement.

Wearable Sensors

In collaboration with Draper Laboratories and the Veterans Administration Hospital, wearable sensors research has been conducted in two projects: a balance belt and a portable motion analysis system.

Balance Belt

The purpose of this study is to develop a wearable Balance Belt that alerts patients with abnormal vestibular function, to help prevent falls and injuries. Four vibrotactile actuators positioned around the belt alert the user when the inertial measurement unit (IMU) detects a likely loss of balance.
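The alerting idea can be illustrated with a simple rule that maps trunk tilt to one of the four vibrotactors. This is a hypothetical sketch: the tilt sign convention, the 10-degree threshold, and the function name `select_tactor` are assumptions made for illustration, and the belt's actual detection logic is not described here.

```python
import math


def select_tactor(roll_deg, pitch_deg, threshold_deg=10.0):
    """Pick which of four belt vibrotactors to drive, or None.

    Hypothetical conventions: roll > 0 means leaning right, pitch > 0
    means leaning forward, both in degrees from upright. An alert fires
    when the total tilt reaches the threshold, and the tactor on the
    side of the dominant lean vibrates.
    """
    # Small-angle approximation of the total tilt from upright.
    tilt = math.hypot(roll_deg, pitch_deg)
    if tilt < threshold_deg:
        return None
    if abs(pitch_deg) >= abs(roll_deg):
        return "front" if pitch_deg > 0 else "back"
    return "right" if roll_deg > 0 else "left"


print(select_tactor(2.0, 3.0))    # small sway: no alert
print(select_tactor(-12.0, 5.0))  # leaning mostly to the left
```

A fixed tilt threshold is the simplest possible rule; it is shown only to make the sensor-to-actuator mapping concrete.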

Portable Motion Analysis System

The purpose of this study is to develop a wearable motion analysis system (WMAS) using commercially available inertial measurement units (IMUs) working in unison to record and output gait parameters in a clinically relevant way. The WMAS must accurately and reliably output common gait parameters such as gait speed, stride length, torso motion, and head rotation velocities, which are often indicators of traumatic brain injury (TBI). The capabilities of the wearable motion analysis system have been validated against the Vicon optical motion analysis system with healthy subjects during various gait trials, including trials with increasing and decreasing cadence and speed, and with turning. A clinically relevant graphical user interface (GUI) will be developed to make this system usable outside of clinical settings.

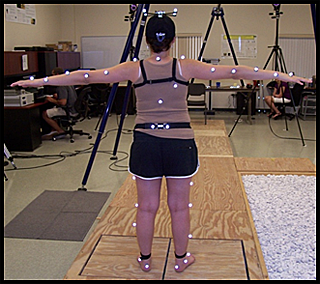

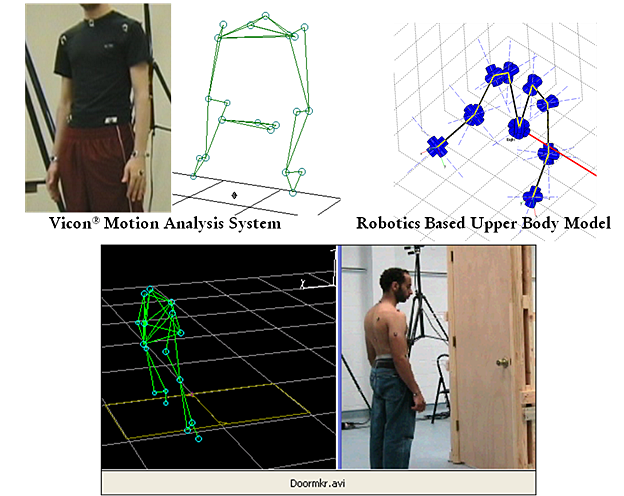
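Once heel strikes have been detected, stride-level parameters such as gait speed and stride length follow from simple arithmetic. The sketch below assumes, hypothetically, that the timestamps and forward positions of successive heel strikes of one foot are already available (for example, after estimating foot position from IMU data); the function and key names are illustrative and are not part of the WMAS.

```python
def gait_parameters(heel_strike_times, heel_strike_positions):
    """Mean stride length, stride time, gait speed and cadence for one foot.

    Hypothetical inputs: times (s) of successive heel strikes of the
    same foot and the foot's forward position (m) at each strike.
    """
    if len(heel_strike_times) != len(heel_strike_positions):
        raise ValueError("times and positions must have equal length")
    if len(heel_strike_times) < 2:
        raise ValueError("need at least two heel strikes")

    # One stride spans two consecutive heel strikes of the same foot.
    stride_lengths = [
        b - a for a, b in zip(heel_strike_positions, heel_strike_positions[1:])
    ]
    stride_times = [b - a for a, b in zip(heel_strike_times, heel_strike_times[1:])]

    mean_length = sum(stride_lengths) / len(stride_lengths)
    mean_time = sum(stride_times) / len(stride_times)
    return {
        "stride_length_m": mean_length,
        "stride_time_s": mean_time,
        "gait_speed_m_per_s": mean_length / mean_time,
        # Two steps (left + right) per stride.
        "cadence_steps_per_min": 2 * 60.0 / mean_time,
    }


print(gait_parameters([0.0, 1.0, 2.0], [0.0, 1.4, 2.8]))
```

Averaging over strides before dividing gives the same gait speed as total distance over total time, which keeps the result insensitive to a single short or long stride.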

Motion Analysis and Prosthetics Research

Through collaboration with the School of Theatre & Dance and the School of Physical Therapy and Rehabilitation Sciences, the biomechanics of human body motion during various activities are analyzed using a Vicon motion analysis system, with the aim of reducing injuries and improving training practices. These activities include upper and lower body movements used by athletes and dancers, as well as the motions of prosthesis users performing recreational or daily activities.

Current upper-limb prosthetic devices offer only powered wrist rotation, making it difficult to grasp and manipulate objects. The intact joints of the upper limb compensate for the limitations of the prosthesis using awkward motions. The wrist and shoulder compensatory motions of people with transradial prostheses have been investigated using an eight-camera infrared Vicon system that collects and analyzes three-dimensional movement data. This information helps clinicians, researchers, and designers develop more effective and practical prosthetic devices. By analyzing the compensatory motions that activities of daily living require because of the prosthesis's limitations, we hope to improve the design and selection of prostheses.

Simulation Tool for Prediction of Human-Upper Body Motion

This project is dedicated to developing a simulation tool consisting of a robotics-based human body model (RHBM) that predicts functional motions, along with integrated modules to aid in the prescription, training, comparative study, and determination of design parameters of upper extremity prostheses. The simulation of activities of daily living performed with various prosthetic devices is refined using data collected in the motion analysis lab.

The current generation of the RHBM has been developed in MATLAB and is a 25-degree-of-freedom, robotics-based kinematic model with subject-specific parameters. The model has been trained and validated using motion analysis data from ten control subjects, and data from amputee subjects are being integrated as they are collected.
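The RHBM's own parameters are not given here. As a toy illustration of what a robotics-based kinematic model computes, the sketch below finds hand position for a hypothetical planar three-link arm (upper arm, forearm, hand) from its joint angles. The link lengths, angles, and the function name `planar_forward_kinematics` are made up for illustration; the actual model is three-dimensional, far more detailed, and written in MATLAB.

```python
import math


def planar_forward_kinematics(link_lengths, joint_angles_rad):
    """Return the (x, y) positions of the base, each joint and the end point.

    Each joint angle is measured relative to the previous link, as in a
    serial-chain robot arm model; the base (here, the shoulder) sits at
    the origin.
    """
    if len(link_lengths) != len(joint_angles_rad):
        raise ValueError("one angle per link is required")
    x = y = 0.0
    heading = 0.0
    points = [(x, y)]
    for length, angle in zip(link_lengths, joint_angles_rad):
        heading += angle  # absolute orientation of this link
        x += length * math.cos(heading)
        y += length * math.sin(heading)
        points.append((x, y))
    return points


# Hypothetical segment lengths (m): upper arm, forearm, hand.
lengths = [0.30, 0.25, 0.08]
# Upper arm hanging down, elbow flexed 90 degrees, wrist neutral.
angles = [math.radians(a) for a in (-90.0, 90.0, 0.0)]
print(planar_forward_kinematics(lengths, angles)[-1])
```

A full human body model chains many such joints in three dimensions, but the principle of accumulating each joint's rotation along the chain is the same.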

Socket Residual-limb Interface Model

This project concentrates on measuring and predicting motion at the socket residual limb interface. The model under development is a 4-degree-of-freedom, robotics-based kinetic model. Movement between the residual limb and the prosthetic socket will be measured by a motion capture system (socket rotations and translations) and by a new optics-based device (relative slip between the inner socket face and the skin surface of the residual limb).

Human Upper Body Modeling and Simulation in Space Conditions for Astronaut Training

The goal of this project is to develop a robotics-based human upper body model (RHBM), and associated constraints, for predicting and simulating human motion in confined spaces and under microgravity conditions to aid astronaut training. A force-based component with an adjustable gravity term will also be added to the current kinematic RHBM to allow simulation of external forces at varying levels of gravity, such as lunar gravity and microgravity. Statistically based probability constraints from motion capture data will also be incorporated to determine whether a mixed modeling method is more accurate and more efficient for studying upper limb movements such as using tools and moving objects. A motion analysis system will be used to collect kinematic data of subjects performing astronaut-based activities of daily living in a confined space similar to the International Space Station. Analysis of these data will then be used to derive the model parameters. Functional joint center estimations will be used to find the geometric parameters of the model, and a variety of control methods, including force fields and statistical processes that simulate microgravity, will be used to determine the control parameters.

Publications

Full Patents Granted

2020

- Tyagi Ramakrishnan, Kyle B. Reed, "Biomimetic transfemoral knee with gear mesh locking mechanism", Patent No. US 10,849,767, Granted: December 15, 2020.

- Catherine Blasse, Daniel Mill, Amber Gatto, "Orthotic Grippers", Patent No. US 10,849,767, Granted: December 1, 2020.

- Christina-Anne K. Lahiff, Kyle B. Reed, Seok H. Kim, Tyagi Ramakrishnan, "Knee orthosis with variable stiffness and damping", Patent No. US 10,736,765, Granted: August 11, 2020.

- Tyagi Ramakrishnan, Kyle B. Reed, "Position/weight-activated knee locking mechanism", Patent No. US 10,702,402, Granted: July 7, 2020.

2019

- Millicent K. Schlafly, Tyagi Ramakrishnan, Kyle B. Reed, "Biomimetic prosthetic device", Patent No. US 10,500,070, Granted: December 10, 2019.

- Redwan Alqasemi, Paul Mitzlaff, Andoni Aguirrezabal, Lei Wu, Karl Rothe, Rajiv Dubey, "Robotic End Effectors For Use With Robotic Manipulators", Patent No. US 10,493,634, Granted: December 3, 2019.

- Jerry West, Redwan Alqasemi, Rajiv Dubey, "Compliant Force/Torque Sensors", Patent No. US 10,486,314, Granted: November 26, 2019.

- Tyagi Ramakrishnan, Kyle B. Reed, "Position/weight-activated knee locking mechanism", Patent No. US 10,369,017, Granted: August 6, 2019.

- Samuel H.L. McAmis, Kyle B. Reed, "Compliant bimanual rehabilitation device and method of use thereof", Patent No. US 10,292,889, Granted: May 21, 2019.

- Millicent K. Schlafly, Tyagi Ramakrishnan, Kyle B. Reed, "Biomimetic prosthetic device", Patent No. US 10,292,840, Granted: May 21, 2019.

- Redwan Alqasemi, Paul Mitzlaff, Andoni Aguirrezabal, Lei Wu, Karl Rothe, Rajiv Dubey, "Robotic End Effectors For Use With Robotic Manipulators", Patent No. US 10,265,862, Granted: April 23, 2019.

2018

- Kyle B. Reed, Ismet Handzic, "Systems and methods for synchronizing the kinematics of uncoupled, dissimilar rotational systems", Patent No. US 9,990,333, Granted: June 5, 2018.

2017

- Matthew Wernke, Samuel Phillips, Derek Lura, Stephanie Carey, Rajiv Dubey, "Prosthesis and orthosis slip detection sensor and method of use", Patent No. US 9,848,822, Granted: December 26, 2017.

- Ismet Handzic, Kyle B. Reed, "Walking assistance devices including a curved tip having a non-constant radius", Patent No. US 9,763,848, Granted: September 19, 2017.

2016

- Ismet Handzic, Kyle B. Reed, "String vibration frequency altering shape", Patent No. US 9,520,110, Granted: December 13, 2016.

- Indika Upashantha Pathirage, Redwan Alqasemi, Rajiv Dubey, Karan Khokar, "Vision-Based Brain-Computer Interface Systems for Performing Activities of Daily Living", Patent No. US 9,389,685, Granted: July 12, 2016.

- Kyle B. Reed, Ismet Handzic, "Gait-altering shoes", Patent No. US 9,295,302, Granted: March 29, 2016.

- Samuel H.L. McAmis, Kyle B. Reed, "Compliant bimanual rehabilitation device and method of use thereof", Patent No. US 9,265,685, Granted: February 23, 2016.

2015

- Kyle B. Reed, John R. Sushko, Craig A. Honeycutt, "Transfemoral prostheses having altered knee locations", Patent No. US 9,050,199, Granted: June 9, 2015.

Published Conference Papers, Journal Papers, and Book Chapters

2021

- L. Bozgeyikli, E. Bozgeyikli, S. Katkoori, A. Raij, R. Alqasemi, "Evaluating the Effects of Visual Fidelity and Magnified View on User Experience in Virtual Reality Games", Journal of Virtual Reality and Broadcasting, 2021.

- Lura DJ, Carey SL, Miro RM, Kahle JT, Highsmith MJ, "Crossover Study of Kinematic, Kinetic, and Metabolic Performance of Active Persons with Transtibial Amputation Using Three Prosthetic Feet", J Prosthet Orthot, 33:2, (2021), pp.110-117.

- F. Rasouli, S.H. Kim, and K.B. Reed, "Superposition principle applies to human walking with two simultaneous interventions", Scientific Reports, Vol. 11, Article number: 7465, 2021.

- F. Rasouli and K.B. Reed, "Identical Limb Dynamics for Unilateral Impairments through Biomechanical Equivalence", Symmetry, Volume 12, Number 4, 705, 2021.

- U. Trivedi, V. Paley, R. Alqasemi, R. Dubey, "Robot Learning from Human Demonstration of Activities of Daily Living (ADL) Tasks", Proceedings of the International Mechanical Engineering Congress & Exposition (IMECE 2021), November 1-5, 2021, Virtual Conference.

- T. Tan, R. Alqasemi, R. Dubey, S. Sarkar, "Formulation and Validation of an Intuitive Quality Measure for Antipodal Grasp Pose Evaluation", In Review for the 2021 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS, 2021), September 27 – October 1, 2021, Prague, Czech Republic.

- T. Tan, R. Alqasemi, R. Dubey, S. Sarkar, "Formulation and Validation of an Intuitive Quality Measure for Antipodal Grasp Pose Evaluation", IEEE Robotics and Automation Letters (RA-L 2021), Vol. 6, Issue 4, pp. 6907-6914, October, 2021.

- L. Habhab, U. Trivedi, R. Alqasemi, R. Dubey, "Autonomous Wheelchair Indoor-Outdoor Navigation System through Accessible Routes", The 14th PErvasive Technologies Related to Assistive Environments Conference (PETRA, 2021), June 29 - July 2, 2021, Corfu, Greece.

- Kahle J, Miro RM, Ho LT, Porter M, Lura DJ, Carey SL, Lunseth P, Highsmith J, Highsmith MJ, "Effect of Transfemoral Prosthetic Socket Interface Design on Gait, Balance, Mobility, and Preference - A Randomized Clinical Trial", Prosthetics and Orthotics International, March 2021.

- Cianfarani Meneghel A, Penick L, Reid M, Carey SL, "Biomechanics for Enhancement of Exercise Stability during Perturbations in Human Exploration Missions", NASA Human Research Program Investigators Workshop, (HRP 2021), Feb. 2021, Virtual Conference.

- Austin R, Patel N, Carey SL, "Biomechanical Analysis for Enhancement of Lunar Surface Operations", NASA Human Research Program Investigators Workshop, (HRP 2021), Feb. 2021, Virtual Conference.

- Patel N, Buth Z, Lauer C, Carey SL, "Measurement of Human-Suit Interaction during Extravehicular Activity to Reduce Injury", NASA Human Research Program Investigators Workshop, (HRP 2021), Feb. 2021, Virtual Conference.

2020

- R. Shaikh, P. Mattioli, K. Corbett, L. Bozgeyikli, E. Bozgeyikli, R. Alqasemi, "Improved User Experience in Virtual Reality - The Portable VR4VR: A Virtual Reality System for Vocational Rehabilitation", Book Chapter in Virtual Reality: Recent Advancements, Applications and Challenges, River Publishers, 2020.

- Lura DJ, Carey SL, Miro RM, Kahle JT, Highsmith MJ, "Crossover Study of Kinematic, Kinetic, and Metabolic Performance of Active Persons with Transtibial Amputation Using Three Prosthetic Feet", J Prosthet Orthot, 2020, doi: 10.1097/JPO.0000000000000290.

- Morris ML, Nunez A, Scott A, Carey SL, "Analyzing the Use of the Fifth Position in Dance Training", In: Lee SH., Morris M., Nicosia S. (eds) Perspectives in Performing Arts Medicine Practice, Springer, 2020, doi: 10.1007/978-3-030-37480-8_10.

- Lee SH, Sanchez-Ramos J, Murtagh R, Vu T, Hardwick D, Carey SL, "A Case Report: Using Multimodalities to Examine a Professional Pianist with Focal Dystonia", In: Lee SH., Morris M., Nicosia S. (eds) Perspectives in Performing Arts Medicine Practice, Springer, 2020, doi: 10.1007/978-3-030-37480-8_11.

- Lopez E, Lee SH, Bahr R, Carey SL, Mott B, Fults A, Lazinski M, Kim ES, "Breathing Techniques in Collegiate Vocalists: The Effects of the Mind-Body Integrated Exercise Program on Singers’ Posture, Tension, Efficacy, and Respiratory Function", In: Lee SH., Morris M., Nicosia S. (eds) Perspectives in Performing Arts Medicine Practice, Springer, 2020, doi: 10.1007/978-3-030-37480-8_8.

- Kahle J, Miro RM, Ho LT, Porter M, Lura DJ, Carey SL, Lunseth P, Highsmith J, Highsmith MJ, "The effect of the transfemoral prosthetic socket interface designs on skeletal motion and socket comfort: A randomized clinical trial", Prosthetics and Orthotics International, 44:3, pp.145-154, 2020, doi: 10.1177/0309364620913459.

- F. Rasouli and K. B. Reed, "Walking assistance using crutches: A state of the art review", Journal of Biomechanics, vol. 98, p. 109489, 2020.

- M. Schlafly and K. B. Reed, "Novel passive ankle-foot prosthesis mimics able-bodied ankle angles and ground reaction forces", Clinical Biomechanics, vol. 72, pp. 202-210, 2020.

- M. Schlafly and K. B. Reed, "Roll-over shape-based design of novel biomimetic ankle-foot prosthesis", JPO: Journal of Prosthetics and Orthotics, 2020.

- D. Huizenga, L. Rashford, B. Darcy, E. Lundin, R. Medas, S. T. Shultz, E. DuBose, and K. B. Reed, "Wearable gait device for stroke gait rehabilitation at home", Topics in Stroke Rehabilitation, 2020.

- Stephenson JB, Haladay DE, Carey SL, Beckstead JW, Robertson D, "A Combination of Core Exercise and Balance-Based Torso Weighting for Women with Multiple Sclerosis", International Journal of MS Care, 22:S2, (2020), pp. 73-74.

- L. Wu, R. Alqasemi, R. Dubey, "Development of Smartphone-Based Human-Robot Interfaces for Individuals with Disabilities", Proceedings of the 2020 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS, 2020), October 25 - 29, 2020, Las Vegas, NV, USA.

- Gatto A, Sundarrao S, Carey SL, "Survey Assessing Assistive Technologies and Activities of Daily Living", Rehabilitation Engineering and Assistive Technology Society of North America (RESNA 2020), Sept. 2020, Virtual Conference.

- Fults A, Carey SL, "Elbow Biomechanics of Independent Wheelchair Transfer: A Case Study", Rehabilitation Engineering and Assistive Technology Society of North America (RESNA 2020), Sept. 2020, Virtual Conference.

- Blocker AL, Lostroscio KL, Carey SL, "Simulated Exercise Device Vibration Isolation System for Spaceflight Applications", American Society of Biomechanics Annual Meeting (ASB 2020), June, 2020, Virtual Conference.

- Fults A, Sundarrao S, Carey SL, "Peak Elbow Angles in Independent Wheelchair Transfer: A Preliminary Study", American Society of Biomechanics Annual Meeting (ASB 2020), June, 2020, Virtual Conference.

- L. Wu, R. Alqasemi, R. Dubey, "Development of Smartphone-Based Human-Robot Interfaces for Individuals with Disabilities", IEEE Robotics and Automation Letters (RA-L 2020), June, 2020.

- R. Mounir, R. Alqasemi, R. Dubey, "BCI-Controlled Hands-Free Wheelchair Navigation with Obstacle Avoidance", arXiv preprint arXiv:2005.04209, May, 2020.

- Blocker AL, Alex SC, Lostroscio KH and Carey SL, "Passive Vibration Isolation System Simulator using an Active Motion Sensing Base", 2020 NASA Human Research Program Investigators Workshop, (HRP 2020), Jan. 2020, Galveston, TX, USA.

2019

- Lee SH, Carey SL, Lazinski M, Kim ES, "An Integrative Intervention Program for College Musicians and Kinematics in Cello Playing", European Journal of Integrative Medicine, 25, pp.34-40, 2019.

- E. Bozgeyikli, A. Raij, S. Katkoori, and R. Dubey, "Locomotion in virtual reality for room scale tracked areas", International Journal of Human-Computer Studies, 122, pp. 38-49, 2019.

- M. Schlafly, Y. Yilmaz, and K. B. Reed, "Feature selection in gait classification of leg length and distal mass", Informatics in Medicine Unlocked, Volume 15, p. 100163, 2019.

- B. Rigsby and K. B. Reed, "Accuracy of dynamic force compensation varies with direction and speed", IEEE Transactions on Haptics, Volume 12, Number 4, pp. 658-664, 2019.

- T. Ramakrishnan, S. H. Kim, and K. B. Reed, "Human Gait Analysis Metric for Gait Retraining", Applied Bionics and Biomechanics, Article ID 1286864, 2019.

- S. Kim, D. Huizenga, I. Handzic, R. Ditwiler, M. Lazinski, T. Ramakrishnan, A. Bozeman, D. Rose, K. B. Reed, "Relearning functional and symmetric walking after stroke using a wearable device: a feasibility study", Journal of NeuroEngineering and Rehabilitation, Volume 16, 2019.

- D. Menychtas, S. Carey, R. Alqasemi, R. Dubey, "Upper Limb Motion Simulation Algorithm for Prosthesis Prescription and Training", Proceedings of the 2019 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS, 2019), November 4 - 8, 2019, Macau, China.

- Gatto A, Sundarrao S, Carey SL, "The Design and Development of a Wrist Hand Orthosis", Rehabilitation Engineering and Assistive Technology Society of North America (RESNA 2019), June 24-28, 2019, Toronto, Canada.

- U. Trivedi, R. Alqasemi, R. Dubey, "Wearable Musical Haptic Sleeves for People with Hearing Impairment", Proceedings of the 12th International Conference on Pervasive Technologies Related to Assistive Environments (PETRA 2019), June 5-7, 2019, Rhodes, Greece.

- Lostroscio KH, Blocker A, Quiocho L, Carey SL, "Exercise of a Vibration Isolation System Simulator", 2019 NASA Human Research Program Investigators Workshop, (HRP 2019), Jan. 2019, Galveston, TX, USA.

2018

- E. Bozgeyikli, A. Raij, S. Katkoori, and R. Dubey, "Locomotion in Virtual Reality for Room Scale Tracked Areas", International Journal of Human Computer Studies, Elsevier, 2018.

- L. Bozgeyikli, A. Raij, S. Katkoori, R. Alqasemi, "Evaluating the Effects of Visual Fidelity and Magnified View on User Experience in Virtual Reality Games", The Journal of Virtual Reality and Broadcasting, 2018.

- E. Bozgeyikli, L. Bozgeyikli, R. Alqasemi, A. Raij, S. Katkoori, R. Dubey, "Virtual Reality Interaction Techniques for Individuals with Autism Spectrum Disorder", Springer International Publishing AG. Part of Springer Nature, Universal Access in Human-Computer Interaction - Methods, Technologies, and Users, LNCS 10908, pp. 58-77, 2018.

- L. Bozgeyikli, E. Bozgeyikli, A. Aguirrezabal, R. Alqasemi, A. Raij, S. Sundarrao, R. Dubey, "Using Immersive Virtual Reality Serious Games for Vocational Rehabilitation of Individuals with Physical Disabilities", Springer International Publishing AG. Part of Springer Nature, Universal Access in Human-Computer Interaction - Methods, Technologies, and Users, LNCS 10908, pp. 48-57, 2018.

- M. Walker and K. B. Reed, "Tactile Morse Code Using Locational Stimulus Identification", IEEE Transactions on Haptics, Volume 11, Number 1, pp. 151-155, 2018.

- T. Ramakrishnan, C. Lahiff, K. B. Reed, "Comparing Gait with Multiple Physical Asymmetries Using Consolidated Metrics", Frontiers in Neurorobotics, Volume 13, Art. 2, 2018.

- L. Bozgeyikli, E. Bozgeyikli, S. Katkoori, A. Raij, R. Alqasemi, "Effects of Virtual Reality Properties on User Experience of Individuals with Autism", ACM Transactions on Accessible Computing, 11(4):1-27, November, 2018.

- S.L. Carey and K.H. Lostroscio, “Human-Vibration Isolation System (VIS) Interactions during Squat and Row Exercises,” American Society for Gravitational and Space Research (ASGSR), Nov. 2018, Washington, DC, USA.

- K.H. Lostroscio, S.L. Carey, L.J. Quiocho, “Measurement of Exercise Ground Reaction Forces under Appropriate Conditions for the Design of Vibration Isolation Systems,” 69th International Astronautical Congress (IAC), Oct. 2018, Bremen, Germany.

- A. Gatto, S. Sundarrao, S.L. Carey, “Design and Development of a Wrist-Hand Orthosis for Individuals with a Spinal Cord Injury,” International Conference on Intelligent Robots and Systems (IROS), October 1-5, 2018, Madrid, Spain.

- R. Mounir, R. Alqasemi and R. Dubey, “BCI-Controlled Hands-Free Wheelchair with Obstacle Avoidance,” IROS workshop on Haptic-enabled shared control of robotic systems: a compromise between teleoperation and autonomy - in the 2018 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS 2018), October 1-5, 2018, Madrid, Spain.

- D. Menychtas, S. Carey, R. Alqasemi and R. Dubey, “Upper Arm Activities of Daily Living Simulation Algorithm for Prosthesis Prescription and Training,” IROS workshop on Wearable Robotics for Motion Assistance and Rehabilitation - in the 2018 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS 2018), October 1-5, 2018, Madrid, Spain.

- E. Bozgeyikli, L. Bozgeyikli, R. Alqasemi, A. Raij, S. Katkoori, R. Dubey, “Virtual Reality Interaction Techniques for Individuals with Autism Spectrum Disorder,” Proceedings of The 20th International Conference on Human-Computer Interaction (HCI International), 15-20 July, 2018, Las Vegas, NV, USA.

- L. Bozgeyikli, E. Bozgeyikli, A. Aguirrezabal, R. Alqasemi, A. Raij, S. Sundarrao, R. Dubey, “Using Immersive Virtual Reality Serious Games for Vocational Rehabilitation of Individuals with Physical Disabilities,” Proceedings of The 20th International Conference on Human-Computer Interaction (HCI International), 15-20 July, 2018, Las Vegas, NV, USA.

- S.L. Carey, A. Aguirrezabal, S. Sundarrao, R. Alqasemi, R.V. Dubey, “Enhanced Control to Improve Navigation and Manipulation of Power Wheelchairs,” 40th Annual International Conference of the IEEE EMBS, July, 2018, Honolulu, HI, USA.

- N. Pernalete, A. Raheja, M. Segura, D. Menychtas, T. Wieczorak, S.L. Carey, “Eye-Hand Coordination Assessment Metrics Using a Multi-Platform Haptic System with Eye-Tracking and Motion Capture Feedback,” 40th Annual International Conference of the IEEE EMBS, July, 2018, Honolulu, HI, USA.

- R. Shaikh, P. Mattioli, K. Corbett, L. Bozgeyikli, E. Bozgeyikli, R. Alqasemi, “The Portable VR4VR: A Virtual Reality System for Vocational Rehabilitation,” Proceedings of The Workshop on Robotics in Virtual Reality at The IEEE International Conference on Robotics and Automation (ICRA 2018), May 21 - 25, 2018, Brisbane, Australia.

- L. Bozgeyikli, E. Bozgeyikli, R. Alqasemi, and R. Dubey, “Robotics in Virtual Reality Workshop,” Proceedings of the 2018 IEEE International Conference on Robotics & Automation (ICRA 2018), May 21 - 25, 2018, Brisbane, Australia.

- M. Mashali, L. Wu, R. Alqasemi, and R. Dubey, “Controlling a Non-Holonomic Mobile Manipulator in a Constrained Floor Space,” Proceedings of the 2018 IEEE International Conference on Robotics & Automation (ICRA 2018), May 21 - 25, 2018, Brisbane, Australia.

- A. Aguirrezabal, D. Ashley, R. Alqasemi, S. Sundarrao, and R. Dubey, “Enhanced Control to improve Navigation and Manipulation of Power Wheelchairs,” International Society for Gerontechnology's 11th World Conference of Gerontechnology, May 7-11, 2018, St. Petersburg, FL, USA.

- A. Gatto, S.L. Carey, “Design and Development of a Wrist-Hand Orthosis for Individuals with a Spinal Cord Injury,” International Society for Gerontechnology's 11th World Conference of Gerontechnology, May 7-11, 2018, St. Petersburg, FL, USA.

- A. Aguirrezabal, D. Ashley, R. Alqasemi, S. Sundarrao, and R. Dubey, “Enhanced Control to improve Navigation and Manipulation of Power Wheelchairs,” Gerontechnology, 17(s):91-91, 05/2018.

- B. Mott, S.L. Carey, “Evaluating the Effectiveness of Upper Limb Orthoses in Adults with Rheumatoid Arthritis: A Systematic Review,” The Academy of Orthotists and Prosthetists 41st Annual Meeting and Scientific Symposium (AAOP 2018), Feb. 2018, New Orleans, Louisiana, USA.

- D.J. Lura, S.L. Carey, R.M. Miro, J.T. Kahle, M.J. Highsmith, “Gait Performance of Three Prosthetic Feet for High-Performance Transtibial Amputees,” The Academy of Orthotists and Prosthetists 41st Annual Meeting and Scientific Symposium (AAOP 2018), Feb. 2018, New Orleans, Louisiana, USA.

- S.L. Carey, A.D. Knight, M.J. Highsmith, “Comparison of Voluntary Open and Closing Terminal Devices using the Box and Blocks Test,” The Academy of Orthotists and Prosthetists 41st Annual Meeting and Scientific Symposium (AAOP 2018), Feb. 2018, New Orleans, Louisiana, USA.

2017

- Hardwick D, Carey SL, Lazinski M, Lee SH, "Measuring the Performer and Performance, In Scholarly Research for Musicians", By Sang-Hie Lee, Routledge, 2017, ISBN-13: 978-1138208889.

- E. Bozgeyikli, L. Bozgeyikli, A. Raij, S. Katkoori, R. Alqasemi, and R. Dubey, “Evaluation of Virtual Reality Interaction Techniques for Individuals with Autism Spectrum Disorder,” Journal of Multimodal Technologies and Interaction, Virtual Reality and Games, 2017.

- Carey SL, Reed KB, Martori A, Ramakrishnan T, Dubey R, "Evaluating the Gait of Lower Limb Prosthesis Users", Wearable Robotics: Challenges and Trends. Biosystems & Biorobotics, 16 (2017), pp. 219-224.

- Carey SL, Stevens, PM, Highsmith MJ, "Differences in Myoelectric and Body-Powered Upper-Limb Prostheses: Systematic Literature Update 2013-2016", J Prosthet Orthot, 29:4S (2017), pp.17-20.

- Carey SL, Lura DJ, Highsmith MJ, "Differences in Myoelectric and Body-Powered Upper Limb Prostheses: A Systematic Literature Review", J Prosthet Orthot, 29:4S (2017), pp.4-16.

- Lura DJ, Kahle J, Wernke M, Miro R, Carey SL, Highsmith MJ, "Crossover Study of Amputee Stair Ascent and Descent Biomechanics Using Genium and C-Leg Prostheses with Comparison to Non-Amputee Control", Gait and Posture, 58, (2017), pp. 103-107.

- A. Manasrah, N. Crane, R. Guldiken, and K. B. Reed, "Perceived Cooling Using Asymmetrically-Applied Hot and Cold Stimuli", IEEE Transactions on Haptics, Volume 10, Number 1, pp. 75-83, 2017.

- H. Muratagic, T. Ramakrishnan, and K. B. Reed, "Combined effects of leg length discrepancy and the addition of distal mass on gait asymmetry", Gait & Posture, Volume 58, pp. 487-492, 2017.

- U. Trivedi, J. McDonnough, M. Shamsi, A. Ochoa, A. Braynen, C. Krukauskas, R. Alqasemi, R. Dubey, “A Wearable Device for Assisting Persons with Vision Impairment,” Proceedings of the International Mechanical Engineering Congress & Exposition (IMECE 2017), November 3-9, 2017, Tampa, FL, USA.

- R. Mounir, R. Alqasemi, R. Dubey, “Speech Assistance for Persons with Speech Impediments Using Artificial Neural Networks,” Proceedings of the International Mechanical Engineering Congress & Exposition (IMECE 2017), November 3-9, 2017, Tampa, FL, USA.

- S. Gong, R. Alqasemi, R. Dubey, “Gradient Optimization of Inverse Dynamics for Robotic Manipulator Motion Planning Using Combined Optimal Control,” Proceedings of the International Mechanical Engineering Congress & Exposition (IMECE 2017), November 3-9, 2017, Tampa, FL, USA.

- C. Lahiff, M. Schlafly, K. B. Reed, “Effects on Balance When Interfering with Proprioception at the Knee,” Proceedings of the International Mechanical Engineering Congress & Exposition (IMECE 2017), November 3-9, 2017, Tampa, FL, USA.

- F. Rasouli, A. Torres, K. B. Reed, “Assistive Force Redirection of Crutch Gait Produced by the Kinetic Crutch Tip,” Proceedings of the International Mechanical Engineering Congress & Exposition (IMECE 2017), November 3-9, 2017, Tampa, FL, USA.

- M. Schlafly, T. Ramakrishnan, and K. B. Reed, “3D Printed Passive Compliant and Articulating Prosthetic Ankle Foot,” Proceedings of the International Mechanical Engineering Congress & Exposition (IMECE 2017), November 3-9, 2017, Tampa, FL, USA.

- Carey SL, “Development of a Rehabilitation Engineering Course,” British Society of Rehabilitation Medicine (BSRM), Rehab Week, London, UK, July 2017.

- F. Rasouli, D. Huizenga, T. Hess, I. Handzic, and K. B. Reed, “Quantifying the Benefit of the Kinetic Crutch Tip,” Proc. of the 15th Intl. Conf. on Rehabilitation Robotics (ICORR), July 17-20, 2017, London, UK.

- T. Ramakrishnan, M. Schlafly and K. B. Reed, “Evaluation of 3D Printed Anatomically Scalable Transfemoral Prosthetic Knee,” Proc. of the 15th Intl. Conf. on Rehabilitation Robotics (ICORR), July 17-20, 2017, London, UK.

- K. Ashley, R. Alqasemi, R. Dubey, “Robotic Assistance for Performing Vocational Rehabilitation Activities Using BaxBot,” Proceedings of the 15th IEEE/RAS-EMBS International Conference on Rehabilitation Robotics (ICORR 2017), July 17-20, 2017, London, UK.

- D. Ashley, K. Ashley, R. Alqasemi, R. Dubey, “Semi-Autonomous Mobility Assistance for Power Wheelchair Users Navigating Crowded Environments,” Proceedings of the 15th IEEE/RAS-EMBS International Conference on Rehabilitation Robotics (ICORR 2017), July 17-20, 2017, London, UK.

- L. Bozgeyikli, A. Raij, S. Katkoori, and R. Alqasemi, “Effects of Instruction Methods on User Experience in Virtual Reality Serious Games,” Proceedings of International Conference on Human-Computer Interaction, July 9-14, 2017, Vancouver, Canada.

- Knight A, Carey SL, Dubey R, “Transradial Prosthesis Performance Enhanced with the Use of a Computer Assisted Rehabilitation Environment,” Proceedings of the 10th International Conference on Pervasive Technologies Related to Assistive Environments (PETRA), Rhodes, Greece, June, 2017.

- Menychtas D, Carey SL, “Comparing the Task Joint Motion Between Able-bodied and Transradial Prosthesis Users During Activities of Daily Living,” 16th World Congress of the International Society for Prosthetics and Orthotics (ISPO), Cape Town, South Africa, May 2017.

- Knight A, Carey SL, Dubey R, “Upper Extremity Prosthetic Training and Rehabilitation with the Use of Virtual Reality,” 16th World Congress of the International Society for Prosthetics and Orthotics (ISPO), Cape Town, South Africa, May 2017.

- L. Wu, R. Alqasemi, and R. Dubey, “Kinematic Modeling and Control Algorithm for Non-holonomic Mobile Manipulator and Testing on WMRA system,” Proceedings of the Florida Conference on Recent Advances in Robotics (FCRAR), May 11-12, 2017, Boca Raton, FL, USA.

- E. Bozgeyikli, L. Bozgeyikli, A. Aguirrezabal, R. Alqasemi, S. Sundarrao, and R. Dubey, “Vocational Rehabilitation of Individuals with Disabilities Using Virtual Reality,” Proceedings of the Florida Conference on Recent Advances in Robotics (FCRAR), May 11-12, 2017, Boca Raton, FL, USA.

- Knight A, Carey SL, Dubey R, “Upper Extremity Prosthetic Training with the Use of a Computer Assisted Rehabilitation Environment (CAREN),” The Academy of Orthotists and Prosthetists 43rd Annual Meeting and Scientific Symposium (AAOP 2017), Chicago, IL, March, 2017.

- L. Bozgeyikli, A. Raij, S. Katkoori and R. Alqasemi, “A Survey on Virtual Reality for Training Individuals with Autism Spectrum Disorder: Design Considerations,” IEEE Transactions on Learning Technologies (TLT), Vol. 10, No. 1, Jan-Mar, 2017.

- L. Bozgeyikli, E. Bozgeyikli, A. Raij, R. Alqasemi, S. Katkoori, and R. Dubey, “Vocational Rehabilitation of Individuals with Autism Spectrum Disorder with Virtual Reality,” ACM Transactions on Accessible Computing (TACCESS), Vol. 49, No. 4, January, 2017.

- R. Alqasemi, “Virtual Reality For Vocational Assessment and Rehabilitation of Individuals With Disabilities (VR4VR),” 24th Annual Conference on Autism and Related Disabilities (CARD), January 20-22, 2017, Orlando, FL, USA.

2016

- E. Bozgeyikli, L. Bozgeyikli, A. Raij, S. Katkoori, R. Alqasemi, and R. Dubey, “Virtual Reality Interaction Techniques for Individuals with Autism Spectrum Disorder: Design Considerations and Preliminary Results,” HCI International Conference, 2016 Human-Computer Interaction. Interaction Platforms and Techniques Book Chapter, Volume 9732, pp. 127-137, Springer, 2016.

- Highsmith MJ, Lura DJ, Carey SL, Mengelkoch LJ, Kim SH, Quillen WH, Kahle JT, Miro RM, “Correlations between residual limb length and joint moments during sitting and standing movements in transfemoral amputees”, Prosthet Orthot Int (POI), 40:4 (2016), pp. 522-27. PMID: 25628379.

- I. Handzic, H. Muratagic, and K. B. Reed, “Two-Dimensional Kinetic Shape Dynamics: Verification and Application”, Journal of Nonlinear Dynamics, Volume 2016, ID 8124015, 2016.

- Highsmith MJ, Kahle JT, Miro R, Cress ME, Lura DJ, Quillen WS, Carey SL, Dubey RV, Mengelkoch L, “Functional Performance Differences Between the Genium and C-Leg Prosthetic Knees and Intact Knees”, Journal of Rehabilitation Research & Development (JRRD), 53:6 (2016), pp. 753-66.

- Highsmith MJ, Kahle JT, Miro RM, Lura DJ, Carey SL, Wernke MM, Kim SH, Quillen WS, “Differences in Military Obstacle Course Performance Between Three Energy-Storing and Shock Adapting Prosthetic Feet in High-Functioning Transtibial Amputees: Double-Blind, Randomized Control Trial”, Mil Med, 181:S4 (2016), pp. 45-54.

- Pernalete N, Raheja A, Carey SL, “Haptic and Visual Feedback Technology for Upper-Limb Disability Assessment,” Proceedings of the ASME 2016 International Mechanical Engineering Congress & Exposition (IMECE2016), Phoenix, AZ, Nov. 2016.

- L. Bozgeyikli, A. Raij, S. Katkoori, and R. Alqasemi, “Effects of Visual Fidelity and Magnified View on Task Performance in Virtual Reality Games,” Proceedings of EuroVR Conference, European Association for Virtual Reality and Augmented Reality, November 22-24, 2016, Athens, Greece.

- Menychtas D, Carey SL, Dubey R, Lura D (2016), “A Robotic Human Body Model with Joint Limits for Simulation of Upper Limb Prosthesis Users,” Proceedings of the 2016 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS 2016), Daejeon, Korea, Oct. 2016.

- Carey SL, Reed KB, Martori A, Ramakrishnan T, Dubey R, “Evaluating the Gait of Lower Limb Prosthesis Users,” The International Symposium on Wearable Robotics (WeRob), Segovia, Spain, October, 2016.

- E. Bozgeyikli, A. Raij, S. Katkoori, and R. Dubey, “Point & Teleport: A Noteworthy Locomotion Technique for Virtual Reality,” ACM SIGCHI Annual Symposium on Computer-Human Interaction in Play (CHI PLAY), October 16-19, 2016, Austin, TX.

- L. Bozgeyikli, A. Raij, S. Katkoori, and R. Alqasemi, “Effects of Environmental Clutter and Motion on User Performance in Virtual Reality Game,” Proceedings of ACM CHIPlay Conference Workshop on Fictional Game Elements: Critical Perspectives on Gamification Design, October 16-19, 2016, Austin, TX, USA.

- E. Bozgeyikli, A. Raij, S. Katkoori, and R. Dubey, “Locomotion in Virtual Reality for Individuals with Autism Spectrum Disorder,” ACM Spatial User Interaction Conference (SUI), October 15-16, 2016, Tokyo, Japan.

- Highsmith MJ, Kahle JT, Wernke MM, Carey SL, Miro RM, Lura DJ, Sutton BS, “Effects of the Genium Knee System on Functional Level, Stair Ambulation, Perceptive and Economic Outcomes in Transfemoral Amputees”, Technol Innov, 18:2-3 (2016), pp. 139-50.

- Highsmith MJ, Kahle JT, Miro RM, Cress ME, Quillen WS, Carey SL, Dubey RV, Mengelkoch LJ, “Concurrent Validity of the Continuous Scale-Physical Functional Performance-10 (CS-PFP-10) Test in Transfemoral Amputees”, Technol Innov, 18:2-3 (2016), pp.185-91.

- T. Ramakrishnan, H. Muratagic, and K. B. Reed, “Combined gait asymmetry metric,” Conf. Proc. 38th IEEE Eng. Med. Biol. Soc. (EMBC), August 17-20, 2016, Orlando, FL, USA.

- S. H. Kim, C. Lahiff, T. Ramakrishnan and K. B. Reed, “Knee orthosis with variable stiffness and damping that simulates hemiparetic gait,” Conf. Proc. 38th IEEE Eng. Med. Biol. Soc. (EMBC), August 17-20, 2016, Orlando, FL, USA.

- B. Rigsby and K. B. Reed, “Effect of Weight and Number of Fingers on Bimanual Force Recreation,” Conf. Proc. 38th IEEE Eng. Med. Biol. Soc. (EMBC), August 17-20, 2016, Orlando, FL, USA.

- S. H. Kim, I. Handzic, D. Huizenga, R. Edgeworth, M. Lazinski, T. Ramakrishnan, and K. B. Reed, “Initial Results of the Gait Enhancing Mobile Shoe on Individuals with Stroke,” Conf. Proc. 38th IEEE Eng. Med. Biol. Soc. (EMBC), August 17-20, 2016, Orlando, FL, USA.

- B. Rigsby and K. B. Reed, “Assessing the Effect of Experience on Bimanual Force Recreation,” Conf. Proc. 38th IEEE Eng. Med. Biol. Soc. (EMBC), August 17-20, 2016, Orlando, FL, USA.

- K. A. Hart, J. Chapin, and K. B. Reed, “Haptics Morse Code Communication for Deaf and Blind Individuals,” Conf. Proc. 38th IEEE Eng. Med. Biol. Soc. (EMBC), August 17-20, 2016, Orlando, FL, USA.

- Wieczorek T, Menychtas D, Pernalete N, Carey SL, “Motion Capture Feedback for Upper-Limb Disability Task Variation Assessment,” 38th Annual International Conference of the IEEE Engineering in Medicine and Biology Society, Orlando, FL, August, 2016.

- Carey SL, Menychtas D, Sullins T, Dubey RV, “Development of a Simulation Tool for Upper Extremity Prostheses,” 38th Annual International Conference of the IEEE Engineering in Medicine and Biology Society, Orlando, FL, August, 2016.

- Menychtas D, Sullins T, Rigsby B, Carey SL, Reed KB, “Assessing the Role of Preknowledge in Force Compensation During a Tracking Task,” 38th Annual International Conference of the IEEE Engineering in Medicine and Biology Society, Orlando, FL, August, 2016.

- K. Ashley, R. Alqasemi, R. Dubey, “Interactive Robotic Assistance Using Baxter,” Proceedings of the 38th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC 2016), August 17-20, 2016, Orlando, FL, USA.

- E. Bozgeyikli, L. Bozgeyikli, A. Aguirrezabal, R. Alqasemi, and R. Dubey, “VR4VR: An Immersive Virtual Reality Vocational Rehabilitation System for Individuals with Severe Disabilities,” IEEE Engineering in Medicine and Biology Society Conference, August 16-20, 2016, Orlando, FL, USA.

- M. Mashali, R. Alqasemi, R. Dubey, “Novel Dual-Trajectory Tracking Using Spherical Control Variables,” Proceedings of the 2016 World Automation Congress, July 31 – August 4, 2016, Rio Mar, Puerto Rico.

- E. Bozgeyikli, L. Bozgeyikli, A. Raij, S. Katkoori, R. Alqasemi, and R. Dubey, “Virtual Reality Interaction Techniques for Individuals with Autism Spectrum Disorder: Design Considerations and Preliminary Results,” Proceedings of the 18th International Conference on Human-Computer Interaction (HCI 2016), July 17-22, 2016, Toronto, Canada.

- L. Bozgeyikli, E. Bozgeyikli, A. Aguirrezabal, R. Alqasemi, S. Sundarrao, and R. Dubey, “Immersive Virtual Reality for Vocational Rehabilitation of Individuals with Disabilities,” Proceedings of the 2016 Rehabilitation Engineering and Assistive Technology Society of North America (RESNA 2016), July 10-14, 2016, Arlington, Virginia, USA.

- Lee SH, Carey SL, Lazinski M, “Fit to Play: Mind-body Integration Program for Collegiate Musicians,” Medical Problems of Performing Artists, Annual Symposium of the Performing Arts Medicine Association (PAMA), Snowmass, CO, July 2016.

- Knight A, Carey SL, Dubey R, “An Interim Analysis of the Use of Virtual Reality to Enhance Upper Limb Prosthetic Training and Rehabilitation,” Proceedings of the 9th International Conference on Pervasive Technologies Related to Assistive Environments (PETRA), Corfu, Greece, June, 2016.

- Menychtas D, Carey SL, Dubey R, “Simulation for Upper Limb Prosthesis Users,” 2016 BMES/FDA Frontiers in Medical Devices Conference, College Park, MD, May 2016.

- Martori AL, Carey SL, “Comparison of Able-bodied and Bilateral Amputee Kinematics at Various Elevations,” 2016 BMES/FDA Frontiers in Medical Devices Conference, College Park, MD, May 2016.

- A. Leon, J. Marzano, A. Oyenusi, G. Sanchez, K. Ashley, R. Alqasemi, “Autonomous Intelligent Grasping in Assistive Robotics Applications,” Proceedings of the 29th Florida Conference on Recent Advances in Robotics and Robot Showcase (FCRAR 2016), May 12-13, 2016, Miami, FL, USA.

- Carey SL, “Assessment Tools for Benchmarking Lower Limb Prosthetic Gait,” Benchmarking Bipedal Locomotion Website, Cajal Institute, Spanish National Research Council, March 2016.

- L. Bozgeyikli, E. Bozgeyikli, A. Raij, R. Alqasemi, S. Katkoori, and R. Dubey, “Virtual Reality Vocational Rehabilitation of Individuals with Autism Spectrum Disorder,” ACM Transactions on Accessible Computing (TACCESS), March, 2016.

- A. Knight, “An Interim Analysis of the Use of Virtual Reality to Enhance Upper-Limb Prosthetic Training and Rehabilitation,” The Academy of Orthotists and Prosthetists 41st Annual Meeting and Scientific Symposium (AAOP 2016), Orlando, FL, March 2016.

- L. Bozgeyikli, E. Bozgeyikli, A. Raij, R. Alqasemi, S. Katkoori, and R. Dubey, “Vocational Training with Immersive Virtual Reality for Individuals with Autism: Towards Better Design Practices,” Proceedings of the IEEE Virtual Reality Conference (VR 2016), March 19-23, 2016, Greenville, South Carolina, USA.

2015

- Lura DJ, Wernke MM, Carey SL, Kahle JT, Miro RM, Highsmith MJ, "Differences in Knee Flexion between the Genium and C-Leg Microprocessor Knees While Walking on Level Ground and Ramps," Clinical Biomechanics, 30:2 (2015), pp. 175-81.

- I. Handzic and K. B. Reed, "Perception of Gait Patterns that Deviate from Normal and Symmetric Biped Locomotion," Frontiers in Psychology, Volume 6, 2015.

- T. Ramakrishnan, C. Lahiff, A. K. Marroquin, and K. B. Reed, "Position and Weight Activated Passive Prosthetic Knee Mechanism," Proc. of the ASME 2015 International Mechanical Engineering Congress and Exposition (IMECE 2015), November 13-19, 2015, Houston, TX, USA.

- Carey SL, Lopez A, "Human Balance: Study and Evaluation by Motion Capture, EOG and EMG Biopotentials," Biomedical Engineering Society Annual Meeting (BMES 2015), Tampa, FL, October 7-10, 2015.

- Lura D, Carey SL, Dubey R, "Effect of Additional Weight on Upper Limb Pose During Activities of Daily Living," Biomedical Engineering Society Annual Meeting (BMES 2015), Tampa, FL, October 7-10, 2015.

- Peterson M, Jongprasithporn M, Carey SL, "Evaluation of Fall Recovery and Gait Adaptation to Tripping Perturbations," Biomedical Engineering Society Annual Meeting (BMES 2015), Tampa, FL, October 7-10, 2015.